
Topics:

Lars Pålsson Syll considers the following as important: Economics, Theory of Science & Methodology


The logic of economic models

Analogue-economy models may picture Galilean thought experiments or they may describe credible worlds. In either case we have a problem in taking lessons from the model to the world. The problem is the venerable one of unrealistic assumptions, exacerbated in economics by the fact that the paucity of economic principles with serious empirical content makes it difficult to do without detailed structural assumptions. But the worry is not just that the assumptions are unrealistic; rather, they are unrealistic in just the wrong way.

Nancy Cartwright

One of the limitations with economics is the restricted possibility to perform experiments, forcing it to mainly rely on observational studies for knowledge of real-world economies.

But still, the idea of performing laboratory experiments holds a firm grip on our wish to discover (causal) relationships between economic ‘variables.’ If we only could isolate and manipulate variables in controlled environments, we would probably find ourselves in a situation where we with greater ‘rigour’ and ‘precision’ could describe, predict, or explain economic happenings in terms of ‘structural’ causes, ‘parameter’ values of relevant variables, and economic ‘laws.’


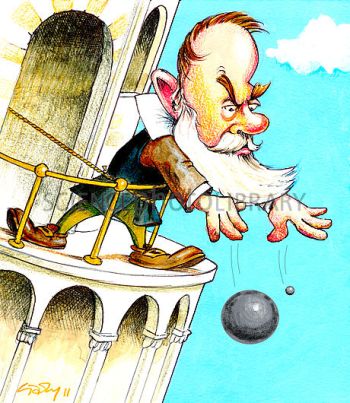

Galileo Galilei’s experiments are often held up as exemplary for how to perform experiments to learn something about the real world. Galileo’s experiments were, according to Nancy Cartwright (Hunting Causes and Using Them, p. 223),

designed to find out what contribution the motion due to the pull of the earth will make, with the assumption that the contribution is stable across all the different kinds of situations falling bodies will get into … He eliminated (as far as possible) all other causes of motion on the bodies in his experiment so that he could see how they move when only the earth affects them. That is the contribution that the earth’s pull makes to their motion.

Galileo’s heavy balls dropping from the tower of Pisa confirmed that the distance an object falls is proportional to the square of time, and that this law (empirical regularity) of falling bodies could be applicable outside a vacuum tube when, e.g., air resistance is negligible.

The big problem is to decide or find out exactly for which objects air resistance (and other potentially ‘confounding’ factors) is ‘negligible.’ In the case of heavy balls, air resistance is obviously negligible, but how about feathers or plastic bags?
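The negligibility question can be made concrete with a small sketch. It compares the vacuum law of falling bodies with the textbook closed-form solution for a fall under quadratic air drag. The two terminal velocities are hypothetical round numbers picked only for illustration, not measurements, and the quadratic-drag model is itself an assumption.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def fall_vacuum(t):
    """Distance fallen from rest after t seconds with no air resistance."""
    return 0.5 * G * t ** 2


def fall_with_drag(t, terminal_velocity):
    """Distance fallen from rest under quadratic air drag.

    Closed-form solution of dv/dt = g * (1 - (v / v_t)^2),
    where v_t is the terminal velocity of the body.
    """
    v_t = terminal_velocity
    return (v_t ** 2 / G) * math.log(math.cosh(G * t / v_t))


def relative_shortfall(t, terminal_velocity):
    """Share of the vacuum distance 'lost' to air resistance."""
    ideal = fall_vacuum(t)
    return (ideal - fall_with_drag(t, terminal_velocity)) / ideal


# Hypothetical terminal velocities in m/s, chosen only for illustration.
BODIES = {"heavy ball": 100.0, "feather": 1.0}

if __name__ == "__main__":
    for name, v_t in BODIES.items():
        share = relative_shortfall(2.0, v_t)
        print(f"{name}: {share:.1%} short of the vacuum law after 2 s")
```

With these assumed values the heavy ball stays within about one percent of the vacuum law after two seconds, while the feather falls short of it by roughly nine tenths. The ‘law’ is the same in both cases; what differs is whether the disturbing factor can be ignored.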

One possibility is to take the all-encompassing-theory road: find out all about possible disturbing/confounding factors (not only air resistance) influencing the fall, and build that into one great model delivering accurate predictions on what happens when the object that falls is not only a heavy ball but feathers and plastic bags. This usually amounts to ultimately stating some kind of ceteris paribus interpretation of the ‘law.’

Another road to take would be to concentrate on the negligibility assumption and to specify the domain of applicability to be only heavy compact bodies. The price you have to pay for this is that (1) ‘negligibility’ may be hard to establish in open real-world systems, (2) the generalisation you can make from ‘sample’ to ‘population’ is heavily restricted, and (3) you actually have to use some ‘shoe leather’ and empirically try to find out how large the ‘reach’ of the ‘law’ is.

In mainstream economics, one has usually settled for the ‘theoretical’ road (and in case you think the present ‘natural experiments’ hype has changed anything, remember that to mimic real experiments, exceedingly stringent special conditions have to obtain).

In the end, it all boils down to one question — are there any Galilean ‘heavy balls’ to be found in economics, so that we can indisputably establish the existence of economic laws operating in real-world economies?

As far as I can see there are some heavy balls out there, but not even one single real economic law.

Economic factors/variables are more like feathers than heavy balls — non-negligible factors (like air resistance and chaotic turbulence) are hard to rule out as having no influence on the object studied.

Galilean experiments are hard to carry out in economics, and the theoretical ‘analogue’ models economists construct and in which they perform their ‘thought-experiments’ build on assumptions that are far away from the kind of idealized conditions under which Galileo performed his experiments. The ‘nomological machines’ that Galileo and other scientists have been able to construct have no real analogues in economics. The stability, autonomy, modularity, and interventional invariance that we may find between entities in nature simply are not there in real-world economies. That’s a real-world fact, and contrary to the beliefs of most mainstream economists, it won’t go away simply by applying deductive-axiomatic economic theory with tons of more or less unsubstantiated assumptions.

By this, I do not mean to say that we have to discard all (causal) theories/laws building on modularity, stability, invariance, etc. But we have to acknowledge the fact that outside the systems that possibly fulfil these requirements/assumptions, they are of little substantial value. Running paper and pen experiments on artificial ‘analogue’ model economies is a sure way of ‘establishing’ (causal) economic laws or solving intricate econometric problems of autonomy, identification, invariance and structural stability — in the model world. But they are pure substitutes for the real thing and they don’t have much bearing on what goes on in real-world open social systems. Setting up convenient circumstances for conducting Galilean experiments may tell us a lot about what happens under those kinds of circumstances. But — few, if any, real-world social systems are ‘convenient.’ So most of those systems, theories and models, are irrelevant for letting us know what we really want to know.

To solve, understand, or explain real-world problems you actually have to know something about them; logic, pure mathematics, data simulations or deductive axiomatics don’t take you very far. Most econometrics and economic theories/models are splendid logic machines. But applying them to the real world is a totally hopeless undertaking! The assumptions one has to make in order to successfully apply these deductive-axiomatic theories/models/machines are devastatingly restrictive and mostly empirically untestable, and hence make their real-world scope ridiculously narrow. To fruitfully analyse real-world phenomena with models and theories you cannot build on assumptions that are patently absurd and known to be so. No matter how much you would like the world to entirely consist of heavy balls, the world is not like that. The world also has its fair share of feathers and plastic bags.

The problem articulated by Cartwright (in the quote at the top of this post) is that most of the ‘idealizations’ we find in mainstream economic models are not ‘core’ assumptions, but rather structural ‘auxiliary’ assumptions. Without those supplementary assumptions, the core assumptions deliver next to nothing of interest. So to come up with interesting conclusions you have to rely heavily on those other — ‘structural’ — assumptions.

Let me just take one example to show that as a result of this the Galilean virtue is totally lost — there is no way the results achieved within the model can be exported to other circumstances.

When Pissarides, in his ‘Loss of Skill during Unemployment and the Persistence of Unemployment Shocks’ (QJE, 1992), tries to explain involuntary unemployment, he does so by constructing a model using assumptions such as “two overlapping generations of fixed size”, “wages determined by Nash bargaining”, “actors maximizing expected utility”, “endogenous job openings”, and “job matching describable by a probability distribution.” The core assumption of expected-utility-maximizing agents doesn’t take the model anywhere, so to get some results Pissarides has to load his model with all these constraining auxiliary assumptions. Without those assumptions the model would deliver nothing.

The problem? There is no way the results we get in that model would happen in reality! The model is not a Galilean thought-experiment. Given the set of constraining assumptions, this or that happens. But change only one of these assumptions and something completely different may happen. That’s not the kind of knowledge we are looking for. We want to know what happens to unemployment in general in the real world, not what might possibly happen in a model given a constraining set of assumptions known to be false. This should come as no surprise. How that model, with all its more or less outlandish-looking assumptions, should ever be able to connect with the real world is, to say the least, somewhat unclear. As Cartwright has it, the assumptions are not only unrealistic, they are unrealistic “in just the wrong way.”

So why do mainstream economists keep on pursuing this modelling project?

Mainstream economists want to explain social phenomena, structures and patterns, based on the assumption that the agents are acting in an optimizing (rational) way to satisfy given, stable and well-defined goals.

The procedure is analytical. The whole is broken down into its constituent parts so as to be able to explain (reduce) the aggregate (macro) as the result of interaction of its parts (micro).

Modern mainstream economists ground their models on a set of core assumptions (CA) — basically describing the agents as ‘rational’ actors — and a set of auxiliary assumptions (AA). Together CA and AA make up what might be called the ‘ur-model’ (M) of all mainstream economic models. Based on these two sets of assumptions, they try to explain and predict both individual (micro) and — most importantly — social phenomena (macro).

The core assumptions typically consist of:

CA1 Completeness — the rational actor is able to compare different alternatives and decide which one(s) he prefers.

CA2 Transitivity — if the actor prefers A to B, and B to C, he must also prefer A to C.

CA3 Non-satiation — more is preferred to less.

CA4 Maximizing expected utility — in choice situations under risk (calculable uncertainty) the actor maximizes expected utility.

CA5 Consistent efficiency equilibria — the actions of different individuals are consistent, and the interaction between them results in an equilibrium.
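To see what the first of these axioms demand of an actor, here is a minimal sketch that checks completeness and transitivity for a finite set of alternatives and picks a lottery by expected utility. The function names, the example rankings, the lotteries and the utility functions are all hypothetical and introduced only for illustration.

```python
from itertools import product


def is_complete(alternatives, weakly_prefers):
    """Completeness: every pair can be ranked in at least one direction."""
    return all(
        weakly_prefers(a, b) or weakly_prefers(b, a)
        for a, b in product(alternatives, repeat=2)
    )


def is_transitive(alternatives, weakly_prefers):
    """Transitivity: a over b and b over c together imply a over c."""
    return all(
        weakly_prefers(a, c)
        for a, b, c in product(alternatives, repeat=3)
        if weakly_prefers(a, b) and weakly_prefers(b, c)
    )


def expected_utility(lottery, utility):
    """Probability-weighted utility of a list of (probability, outcome) pairs."""
    return sum(p * utility(x) for p, x in lottery)


def choose(lotteries, utility):
    """Return the name of the lottery with the highest expected utility."""
    return max(lotteries, key=lambda name: expected_utility(lotteries[name], utility))


def more_is_better(a, b):
    """Hypothetical ranking of quantities in line with non-satiation."""
    return a >= b


# Hypothetical cyclic ranking: A over B, B over C, C over A.
CYCLE = {("A", "B"), ("B", "C"), ("C", "A"), ("A", "A"), ("B", "B"), ("C", "C")}


def cyclic(a, b):
    return (a, b) in CYCLE


# Hypothetical lotteries with the same expected payoff but different risk.
LOTTERIES = {
    "safe": [(1.0, 50.0)],
    "risky": [(0.5, 0.0), (0.5, 100.0)],
}

if __name__ == "__main__":
    print(is_complete([1, 2, 3], more_is_better), is_transitive([1, 2, 3], more_is_better))
    print(is_complete("ABC", cyclic), is_transitive("ABC", cyclic))
    print(choose(LOTTERIES, lambda x: x ** 0.5))
```

The cyclic ranking is complete but not transitive, so it falls outside the rational-choice axioms. Note also that the chosen lottery depends entirely on the utility function fed in, which is itself an extra assumption rather than something the axioms supply.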

When describing the actors as rational in these models, the concept of rationality used is instrumental rationality: consistently choosing the preferred alternative, the one judged to have the best consequences for the actor given his wishes/interests/goals, which are exogenously given in the model. How these preferences/wishes/interests/goals are formed is typically not considered to be within the realm of rationality, and a fortiori not to constitute part of economics proper.

The picture given by this set of core assumptions (rational choice) is a rational agent with strong cognitive capacity that knows what alternatives he is facing, evaluates them carefully, calculates the consequences and chooses the one — given his preferences — that he believes has the best consequences according to him.

Weighing the different alternatives against each other, the actor makes a consistent optimizing (typically described as maximizing some kind of utility function) choice and acts accordingly.

Besides the core assumptions (CA) the model also typically has a set of auxiliary assumptions (AA) spatio-temporally specifying the kind of social interaction between ‘rational actors’ that take place in the model. These assumptions can be seen as giving answers to questions such as

AA1 who are the actors and where and when do they act

AA2 which specific goals do they have

AA3 what are their interests

AA4 what kind of expectations do they have

AA5 what are their feasible actions

AA6 what kind of agreements (contracts) can they enter into

AA7 how much and what kind of information do they possess

AA8 how do the actions of the different individuals/agents interact with each other

So, the ur-model of all economic models basically consists of a general specification of what (axiomatically) constitutes optimizing rational agents and a more specific description of the kind of situations in which these rational actors act (making AA serve as a kind of specification/restriction of the intended domain of application for CA and its deductively derived theorems). The list of assumptions can never be complete, since there will always be unspecified background assumptions and some (often) silent omissions (like closure, transaction costs, etc., regularly based on some negligibility and applicability considerations). The hope, however, is that the ‘thin’ list of assumptions shall be sufficient to explain and predict ‘thick’ phenomena in the real, complex, world.

In some (textbook) model depictions, we are essentially given the following structure,

A1, A2, … An

———————-

Theorem,

where a set of undifferentiated assumptions are used to infer a theorem.

This is, however, too vague and imprecise to be helpful, and does not give a true picture of the usual mainstream modelling strategy, where there’s a differentiation between a set of law-like hypotheses (CA) and a set of auxiliary assumptions (AA), giving the more adequate structure

CA1, CA2, … CAn & AA1, AA2, … AAn

———————————————–

Theorem

or,

CA1, CA2, … CAn

———————-

(AA1, AA2, … AAn) → Theorem,

more clearly underlining the function of AA as a set of (empirical, spatio-temporal) restrictions on the applicability of the deduced theorems.
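The two ways of writing the structure say the same thing logically. A rough sketch, treating CA, AA and the theorem as single true-or-false propositions and the inference line as material implication (a simplifying assumption, since the schemas are really about derivability), checks this with a truth table. All function names are made up for the illustration.

```python
from itertools import product


def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def joint_schema(ca, aa, theorem):
    """(CA and AA) -> Theorem: both sets of assumptions above the line."""
    return implies(ca and aa, theorem)


def conditional_schema(ca, aa, theorem):
    """CA -> (AA -> Theorem): AA moved below the line as a condition."""
    return implies(ca, implies(aa, theorem))


def schemas_equivalent():
    """Compare the two schemas on all eight truth-value assignments."""
    return all(
        joint_schema(ca, aa, t) == conditional_schema(ca, aa, t)
        for ca, aa, t in product([False, True], repeat=3)
    )


if __name__ == "__main__":
    print(schemas_equivalent())
    # When AA does not hold, the conditional schema is satisfied
    # whether or not the theorem holds.
    print(conditional_schema(True, False, False), conditional_schema(True, False, True))
```

The second form just makes visible what the first hides: wherever the auxiliary assumptions fail, the schema is satisfied vacuously and tells us nothing about whether the theorem holds there.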

This underlines the problem noted earlier about Galilean experiments and the restricted reach of the fundamental principles (core assumptions) — specification of AA restricts the range of applicability of the deduced theorem and so makes conclusions made exceedingly difficult to export. In extreme cases, we get

CA1, CA2, … CAn

———————

Theorem,

where the deduced theorems are analytical entities with universal and totally unrestricted applicability, or

AA1, AA2, … AAn

———————-

Theorem,

where the deduced theorem is transformed into an untestable tautological thought-experiment without any empirical commitment whatsoever beyond telling a coherent fictitious as-if story.

Not clearly differentiating between CA and AA means that we can’t make this all-important interpretative distinction, and it opens the door to unwarrantedly ‘saving’ or ‘immunizing’ models from almost any kind of critique by simple equivocation between interpreting models as empirically empty, purely deductive-axiomatic analytical systems and interpreting them as models with explicit empirical aspirations. Flexibility is usually something people deem positive, but in this methodological context it is more a source of trouble than a sign of real strength. Models that are compatible with everything, or come with unspecified domains of application, are worthless from a scientific point of view.

Mainstream ‘as if’ models are based on the logic of idealization and a set of tight axiomatic and ‘structural’ assumptions from which consistent and precise inferences are made. The beauty of this procedure is, of course, that if the assumptions are true, the conclusions necessarily follow. But it is a poor guide for real-world systems. As Hans Albert has it on this ‘style of thought’:

A theory is scientifically relevant first of all because of its possible explanatory power, its performance, which is coupled with its informational content …

Clearly, it is possible to interpret the ‘presuppositions’ of a theoretical system … not as hypotheses, but simply as limitations to the area of application of the system in question. Since a relationship to reality is usually ensured by the language used in economic statements, in this case the impression is generated that a content-laden statement about reality is being made, although the system is fully immunized and thus without content. In my view that is often a source of self-deception in pure economic thought …

The way axioms and theorems are formulated in mainstream economics often leaves their specification with almost no restrictions whatsoever, safely making every imaginable piece of evidence compatible with the all-embracing ‘theory’, and a theory without informational content never risks being empirically tested and falsified. Used in mainstream ‘thought experimental’ activities, it may, of course, be very ‘handy’, but it is totally void of any empirical value.