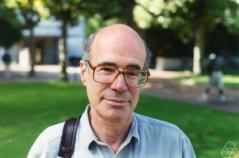

from Lars Syll

Mathematical statistician David Freedman’s Statistical Models and Causal Inference (Cambridge University Press, 2010) and Statistical Models: Theory and Practice (Cambridge University Press, 2009) are marvellous books. They ought to be mandatory reading for every serious social scientist, including economists and econometricians, who doesn’t want to succumb to ad hoc assumptions and unsupported statistical conclusions!

How do we calibrate the uncertainty introduced by data collection? Nowadays, this question has become quite salient, and it is routinely answered using well-known methods of statistical inference, with standard errors, t-tests, and P-values … These conventional answers, however, turn out to depend critically on certain rather restrictive assumptions, for instance, random sampling …

Thus, investigators who use conventional statistical technique turn out to be making, explicitly or implicitly, quite restrictive behavioral assumptions about their data collection process … More typically, perhaps, the data in hand are simply the data most readily available …

The moment that conventional statistical inferences are made from convenience samples, substantive assumptions are made about how the social world operates … When applied to convenience samples, the random sampling assumption is not a mere technicality or a minor revision on the periphery; the assumption becomes an integral part of the theory …
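The point about convenience samples can be illustrated with a small simulation. This is only a sketch under invented assumptions: the population (normal, mean 50, standard deviation 10), the sample size, and the hypothetical selection rule in `convenience_sample` (units with larger values are more likely to be "readily available") are not from the text. It compares how often a nominal 95% interval for the mean covers the true population mean under random sampling and under that selection rule.

```python
import math
import random
import statistics

def mean_and_ci(sample):
    """Return (mean, lower, upper) for the nominal 95% interval mean +/- 1.96 * SE."""
    m = statistics.fmean(sample)
    se = statistics.stdev(sample) / math.sqrt(len(sample))
    return m, m - 1.96 * se, m + 1.96 * se

def coverage(draw_sample, true_mean, n_reps=500):
    """Fraction of repeated samples whose nominal interval contains true_mean."""
    hits = 0
    for _ in range(n_reps):
        _, lo, hi = mean_and_ci(draw_sample())
        if lo <= true_mean <= hi:
            hits += 1
    return hits / n_reps

rng = random.Random(0)
# Assumed population, invented for illustration only.
population = [rng.gauss(50.0, 10.0) for _ in range(20000)]
true_mean = statistics.fmean(population)

def random_sample(n=200):
    # Every unit has the same chance of selection.
    return rng.sample(population, n)

def convenience_sample(n=200):
    # Hypothetical selection rule: the chance that a unit ends up in the
    # data rises with its value, so selection depends on the outcome.
    out = []
    while len(out) < n:
        y = rng.choice(population)
        if rng.random() < 1.0 / (1.0 + math.exp(-(y - 50.0) / 10.0)):
            out.append(y)
    return out

random_cov = coverage(random_sample, true_mean)
convenience_cov = coverage(convenience_sample, true_mean)
print(f"coverage under random sampling:      {random_cov:.2f}")
print(f"coverage under convenience sampling: {convenience_cov:.2f}")
```

The formula for the interval is identical in both cases. What differs is the selection mechanism, which is exactly the substantive assumption the passage says is being smuggled in when the interval is computed from a convenience sample.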

In particular, regression and its elaborations … are now standard tools of the trade. Although rarely discussed, statistical assumptions have major impacts on analytic results obtained by such methods.

Consider the usual textbook exposition of least squares regression. We have $n$ observational units, indexed by $i = 1, \dots, n$. There is a response variable $y_i$, conceptualized as $\mu_i + \epsilon_i$, where $\mu_i$ is the theoretical mean of $y_i$ while the disturbances or errors $\epsilon_i$ represent the impact of random variation (sometimes of omitted variables). The errors are assumed to be drawn independently from a common (Gaussian) distribution with mean 0 and finite variance. Generally, the error distribution is not empirically identifiable outside the model; so it cannot be studied directly, even in principle, without the model. The error distribution is an imaginary population and the errors $\epsilon_i$ are treated as if they were a random sample from this imaginary population, a research strategy whose frailty was discussed earlier.
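In symbols, the textbook setup just described can be written compactly as follows. The symbol $\sigma^2$ for the common error variance is notation assumed here, not taken from the quoted passage.

```latex
y_i = \mu_i + \epsilon_i, \qquad
\epsilon_i \overset{\text{iid}}{\sim} N(0, \sigma^2), \qquad
i = 1, \dots, n
```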

Usually, explanatory variables are introduced and $\mu_i$ is hypothesized to be a linear combination of such variables. The assumptions about the $\mu_i$ and $\epsilon_i$ are seldom justified or even made explicit, although minor correlations in the $\epsilon_i$ can create major bias in estimated standard errors for coefficients …
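The claim about correlated errors can be checked numerically. The sketch below is an illustration under assumptions that are not in the text: a single trending regressor, errors following a stationary AR(1) process with unit variance, and a correlation of 0.2 standing in for "minor". It compares the actual spread of the least squares slope across simulated datasets with the average standard error that the textbook formula reports.

```python
import math
import random
import statistics

def ols_slope_and_se(x, y):
    """Least squares slope and its textbook standard error (assumes independent errors)."""
    n = len(x)
    xbar = statistics.fmean(x)
    ybar = statistics.fmean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = ybar - slope * xbar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)
    return slope, se

def ar1_errors(n, rho, rng):
    """Stationary AR(1) errors with unit marginal variance; rho = 0 gives independence."""
    e = [rng.gauss(0.0, 1.0)]
    scale = math.sqrt(1.0 - rho ** 2)
    for _ in range(n - 1):
        e.append(rho * e[-1] + scale * rng.gauss(0.0, 1.0))
    return e

def se_ratio(rho, n=100, n_reps=1000, seed=1):
    """Ratio of the true sampling SD of the slope to the average reported SE."""
    rng = random.Random(seed)
    x = [i / n for i in range(n)]  # assumed regressor: a simple trend
    slopes, ses = [], []
    for _ in range(n_reps):
        e = ar1_errors(n, rho, rng)
        y = [1.0 + 2.0 * xi + ei for xi, ei in zip(x, e)]  # arbitrary true coefficients
        b, se = ols_slope_and_se(x, y)
        slopes.append(b)
        ses.append(se)
    return statistics.stdev(slopes) / statistics.fmean(ses)

ratio_independent = se_ratio(0.0)
ratio_correlated = se_ratio(0.2)
print(f"true SD / reported SE, independent errors: {ratio_independent:.2f}")
print(f"true SD / reported SE, rho = 0.2:          {ratio_correlated:.2f}")
```

A ratio near 1 means the reported standard error is about right; a ratio above 1 means the textbook formula understates the real uncertainty. How large the distortion is depends on the regressor and the correlation structure chosen here, so the numbers should be read as an illustration of the mechanism, not as a general magnitude.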

Why do $\mu_i$ and $\epsilon_i$ behave as assumed? To answer this question, investigators would have to consider, much more closely than is commonly done, the connection between social processes and statistical assumptions …

We have tried to demonstrate that statistical inference with convenience samples is a risky business. While there are better and worse ways to proceed with the data at hand, real progress depends on deeper understanding of the data-generation mechanism. In practice, statistical issues and substantive issues overlap. No amount of statistical maneuvering will get very far without some understanding of how the data were produced.

More generally, we are highly suspicious of efforts to develop empirical generalizations from any single dataset. Rather than ask what would happen in principle if the study were repeated, it makes sense to actually repeat the study. Indeed, it is probably impossible to predict the changes attendant on replication without doing replications. Similarly, it may be impossible to predict changes resulting from interventions without actually intervening.