from Lars Syll

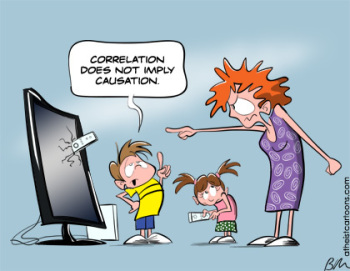

The point is that a superficial analysis, which only looks at the numbers, without attempting to assess the underlying causal structures, cannot lead to a satisfactory data analysis … We must go out into the real world and look at the structural details of how events occur … The idea that the numbers by themselves can provide us with causal information is false. It is also false that a meaningful analysis of data can be done without taking any stand on the real-world causal mechanism … These issues are of extreme importance with reference to Big Data and Machine Learning. Machines cannot expend shoe leather, and enormous amounts of data cannot provide us knowledge of the causal mechanisms in a mechanical way. However, a small amount of knowledge of real-world structures used as causal input can lead to substantial payoffs in terms of meaningful data analysis. The problem with current econometric techniques is that they do not have any scope for input of causal information: the language of econometrics does not have the vocabulary required to talk about causal concepts.

What Asad Zaman tells us in his splendid set of lectures is that causality in the social sciences can never be solely a question of statistical inference. Causality entails more than predictability, and explaining social phenomena in real depth requires theory. Analysis of variation, the foundation of all econometrics, can never in itself reveal how these variations are brought about. Only when we are able to tie actions, processes, or structures to the statistical relations detected can we say that we are getting at relevant explanations of causation.
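To make the point about variation concrete, here is a minimal simulation sketch. It is an illustration under stated assumptions, not anything taken from Zaman's lectures: the two linear Gaussian mechanisms, the coefficient 0.5, and the function names `pearson` and `simulate` are all invented for the example. In one world x causes y, in the other y causes x, and the observational data look the same in both. The worlds only come apart when we intervene on x, which is exactly the kind of knowledge the numbers alone do not contain.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def simulate(x_causes_y, n=20000, seed=0, set_x=None):
    """Draw n samples from one of two hypothetical causal worlds.

    x_causes_y=True : x is exogenous, y = 0.5 * x + noise
    x_causes_y=False: y is exogenous, x = 0.5 * y + noise
    set_x, if given, forces x to that value (an intervention on x).
    Both worlds have unit variances and correlation 0.5 when set_x is None.
    """
    rng = random.Random(seed)
    noise_sd = sqrt(0.75)  # chosen so the dependent variable has variance 1
    xs, ys = [], []
    for _ in range(n):
        if x_causes_y:
            x = rng.gauss(0, 1) if set_x is None else set_x
            y = 0.5 * x + rng.gauss(0, noise_sd)
        else:
            y = rng.gauss(0, 1)
            x = 0.5 * y + rng.gauss(0, noise_sd)
            if set_x is not None:
                x = set_x  # forcing x does nothing to y in this world
        xs.append(x)
        ys.append(y)
    return xs, ys


if __name__ == "__main__":
    for world in (True, False):
        xs, ys = simulate(world)
        _, ys_do = simulate(world, set_x=2.0)
        print(
            "x causes y:" if world else "y causes x:",
            "observed correlation", round(pearson(xs, ys), 2),
            "| mean of y when x is forced to 2:", round(sum(ys_do) / len(ys_do), 2),
        )
```

In this sketch the observed correlation is close to 0.5 in both worlds, while forcing x to 2 moves the average of y to roughly 1 in the first world and leaves it near 0 in the second. Nothing in the correlation itself says which world we are in.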

Most facts have many different possible explanations, but we want to find the best of all contrastive explanations (contrastive, since all real explanation takes place relative to a set of alternatives). So which is the best explanation? Many scientists, influenced by statistical reasoning, think that the likeliest explanation is the best explanation. But the likelihood of x is not in itself a strong argument for thinking it explains y. I would rather argue that what makes one explanation better than another are things like aiming for and finding powerful, deep, causal features and mechanisms that we have warranted and justified reasons to believe in. Statistical reasoning, especially the variety based on a Bayesian epistemology, generally has no room for these kinds of explanatory considerations. The only thing that matters is the probabilistic relation between evidence and hypothesis. That is also one of the main reasons I find abduction (inference to the best explanation) a better description and account of what constitutes actual scientific reasoning and inference.

Some statisticians and data scientists think that algorithmic formalisms somehow give them access to causality. That is, however, simply not true. Assuming ‘convenient’ things like faithfulness or stability is not to give proofs. It is to assume what has to be proven. Deductive-axiomatic methods used in statistics do not produce evidence for causal inferences. The real causality we are searching for is the one existing in the real world around us. If there is no warranted connection between axiomatically derived theorems and the real world, well, then we haven’t really obtained the causation we are looking for.
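A small sketch may help show why faithfulness is an assumption rather than a result. Faithfulness says, roughly, that every statistical independence in the data reflects the structure of the causal graph and not an accidental cancellation of effects. The example below is purely hypothetical: the variables, the coefficients +1 and -1, and the function names are chosen only so that a direct effect of x on y is exactly offset by an indirect effect running through z. The data then show no correlation between x and y, although x does act on y.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def simulate_cancelling_paths(n=20000, seed=1, set_x=None, set_z=None):
    """Hypothetical world with x -> z -> y and x -> y.

    z = x + noise          (x raises z)
    y = x - z + noise      (x raises y directly, z lowers y)
    The total effect of x on y is 1 + (1 * -1) = 0, so the paths cancel.
    set_x / set_z, if given, force those variables to fixed values.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0, 1) if set_x is None else set_x
        if set_z is None:
            z = x + rng.gauss(0, 1)
        else:
            z = set_z
        y = 1.0 * x - 1.0 * z + rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    return xs, ys


def mean(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    xs, ys = simulate_cancelling_paths()
    print("observed correlation of x and y:", round(pearson(xs, ys), 2))

    # Hold z fixed and move x from 0 to 1: the direct effect becomes visible.
    _, y_x0 = simulate_cancelling_paths(set_x=0.0, set_z=0.0)
    _, y_x1 = simulate_cancelling_paths(set_x=1.0, set_z=0.0)
    print("change in mean y when x goes 0 -> 1 with z held fixed:",
          round(mean(y_x1) - mean(y_x0), 2))
```

A procedure that takes faithfulness for granted would read the near-zero correlation as the absence of a causal link between x and y. Whether such cancellations occur in a given real-world setting is not something the formalism can settle; it has to be argued from knowledge of the mechanisms.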