from Lars Syll

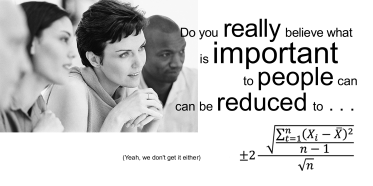

In 2016, Vox sent out a survey to more than 200 scientists, asking, “If you could change one thing about how science works today, what would it be and why?” One of the clear themes in the responses: The institutions of science need to get better at rewarding failure.

One young scientist told us, “I feel torn between asking questions that I know will lead to statistical significance and asking questions that matter.”

The biggest problem in science isn’t statistical significance. It’s the culture. She felt torn because young scientists need publications to get jobs. Under the status quo, in order to get publications, you need statistically significant results. Statistical significance alone didn’t lead to the replication crisis. The institutions of science incentivized the behaviors that allowed it to fester.

As shown over and over again when significance tests are applied, people have a tendency to read ‘not disconfirmed’ as ‘probably confirmed.’ Standard scientific methodology tells us that when there is only, say, a 5% probability that pure sampling error could account for the observed difference between the data and the null hypothesis, it would be more ‘reasonable’ to conclude that we have a case of disconfirmation. Especially if we perform many independent tests of our hypothesis and they all give about the same 5% result as our reported one, I guess most researchers would count the hypothesis as even more disconfirmed.

We should never forget that the underlying parameters we use when performing significance tests are model constructions. Our p-values mean nothing if the model is wrong. And most importantly — statistical significance tests DO NOT validate models!
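To make the point about wrong models concrete, here is a minimal simulation sketch. Everything in it is an illustrative assumption rather than anything from the survey or the studies discussed: the sample size, the AR(1) correlation of 0.7, and the helper names are hypothetical. It runs a standard test of 'the mean is zero' that assumes independent observations, first on data that really are independent and then on serially correlated data. In both cases the null hypothesis is true, so a trustworthy 5% test should reject roughly 5% of the time.

```python
import random
import statistics


def t_statistic(sample):
    """One-sample t-statistic for H0: mean = 0, assuming independent draws."""
    n = len(sample)
    return statistics.fmean(sample) / (statistics.stdev(sample) / n ** 0.5)


def iid_sample(n, rng):
    """Independent standard normal draws: the test's model is right."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def ar1_sample(n, rho, rng):
    """AR(1) series with true mean 0: the independence assumption is wrong."""
    x = rng.gauss(0.0, 1.0)
    series = []
    for _ in range(n):
        x = rho * x + rng.gauss(0.0, 1.0)
        series.append(x)
    return series


def rejection_rate(make_sample, n_sims, critical):
    """Share of simulated datasets in which the test rejects H0."""
    rejections = sum(
        abs(t_statistic(make_sample())) > critical for _ in range(n_sims)
    )
    return rejections / n_sims


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Normal approximation to the two-sided 5% critical value (about 1.96).
critical = statistics.NormalDist().inv_cdf(0.975)
n, n_sims = 100, 2000

rate_iid = rejection_rate(lambda: iid_sample(n, rng), n_sims, critical)
rate_ar1 = rejection_rate(lambda: ar1_sample(n, 0.7, rng), n_sims, critical)

print(f"rejection rate, independent data: {rate_iid:.3f}")
print(f"rejection rate, correlated data:  {rate_ar1:.3f}")
```

Under these assumptions the first rate should land near the nominal 5%, while the second comes out many times larger, although the null hypothesis is true in both cases. The 'p < 0.05' label is only as good as the model behind it.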

In journal articles, a typical regression equation will have an intercept and several explanatory variables. The regression output will usually include an F-test, with p – 1 degrees of freedom in the numerator and n – p in the denominator, where n is the number of observations and p the number of coefficients, counting the intercept. The null hypothesis will not be stated. The missing null hypothesis is that all the coefficients vanish, except the intercept.

If F is significant, that is often thought to validate the model. Mistake. The F-test takes the model as given. Significance only means this: if the model is right and the coefficients are 0, it is very unlikely to get such a big F-statistic. Logically, there are three possibilities on the table:

i) An unlikely event occurred.

ii) Or the model is right and some of the coefficients differ from 0.

iii) Or the model is wrong.

So?
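Possibility iii) is easy to reproduce. The following is a small sketch under stated assumptions, not a reconstruction of any published analysis: the data are simulated from a quadratic relation, a straight line is fitted by ordinary least squares, and the overall F-statistic is computed from the usual sums of squares with p = 2 (intercept plus one slope). The critical value of about 3.94 for F with 1 and 98 degrees of freedom is taken from standard tables and should be read as approximate.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed so the sketch is reproducible
n, p = 100, 2  # p counts the intercept plus one slope

# Assumed data-generating process: quadratic in x, so a straight line is wrong.
x = [3.0 * i / (n - 1) for i in range(n)]
y = [xi ** 2 + rng.gauss(0.0, 0.3) for xi in x]

# Ordinary least squares fit of y = intercept + slope * x.
x_bar, y_bar = statistics.fmean(x), statistics.fmean(y)
sxx = sum((xi - x_bar) ** 2 for xi in x)
sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
slope = sxy / sxx
intercept = y_bar - slope * x_bar

fitted = [intercept + slope * xi for xi in x]
residuals = [yi - fi for yi, fi in zip(y, fitted)]

# Overall F-statistic with p - 1 and n - p degrees of freedom.
ss_model = sum((fi - y_bar) ** 2 for fi in fitted)
ss_resid = sum(r ** 2 for r in residuals)
f_stat = (ss_model / (p - 1)) / (ss_resid / (n - p))

F_CRITICAL = 3.94  # approximate 5% critical value for F(1, 98), from tables

# Crude misspecification check: average residual in each third of the x range.
third = n // 3
mean_low = statistics.fmean(residuals[:third])
mean_mid = statistics.fmean(residuals[third:2 * third])
mean_high = statistics.fmean(residuals[2 * third:])

print(f"F = {f_stat:.1f}, significant at 5%: {f_stat > F_CRITICAL}")
print(f"mean residual by third of x: {mean_low:.2f}, {mean_mid:.2f}, {mean_high:.2f}")
```

The F-statistic is far beyond the critical value, yet the residuals are systematically positive at both ends of the range and negative in the middle, which is what a straight line forced through a curve looks like. The significant F says only that the slope is not zero within the linear model; it says nothing about whether the linear model is the right one.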