Long-time commentator Ken B has drawn my attention to this post by Gene Callahan that complains that the Turing Test doesn’t really test for real intelligence.

Topics:

Lord Keynes considers the following as important: artificial intelligence, Human Consciousness, Popper’s Three World Ontology


In essence, I actually agree on this point, though I would reject Callahan’s dualism.

The endless debates about whether machines or software have real intelligence tend to suffer from a shoddy fallacy of equivocation. Typically, people who want to defend the real intelligence of machines make arguments like this:

(1) machines have intelligence and can think.

(2) a Turing test can show whether a machine has intelligence and can think.

(3) the brain is just an information processing machine, and so since computers are also information processing machines, a sufficiently complex computer should eventually have intelligence.

Yes, but what do you mean by “intelligence” and “thinking” in (1)?

Do you mean the conscious intelligence and thinking that I am experiencing right now? Actually, there is no good reason to think software can think in this way, because (3) is unproven and a non sequitur.

It does not follow that the consciousness of the human mind is just information processing that can be reproduced in other synthetic materials, such as in silicon chips in digital computers. After all, DNA and its behaviour exhibit a type of information processing and storage as well, but DNA is not conscious.

The conscious thinking intelligence that humans have is most probably a higher-level emergent property from specific biological processes in the brain.

As the analytic philosopher John Searle points out, all the empirical evidence suggests that consciousness is a biological phenomenon in the brain, causally dependent on neuronal processes and biochemical activity, but one that can be explained by physicalist science, not by some discredited supernatural idea of souls or by Cartesian dualism (see also Searle 1990; Searle 1992; Searle 2002).

Now the crude behaviourist Turing test does not test for whether an entity has conscious life, but merely whether it simulates human intelligence. Thus even if future computers all start passing Turing tests, it is not going to be some shocking milestone in human history: all it will show is that software programs have become sophisticated enough to fool us into thinking machines have conscious minds as we do, even though they do not.

Searle’s famous Chinese room argument is a devastating blow to the cult of AI.

But there are some additional philosophical points here. There is nothing supernatural about saying that, physically speaking, you need brain chemistry to produce a conscious intelligent mind.

And, philosophically speaking, the key to cracking the problem of human conscious intelligence is Karl Popper’s pluralist ontology. This is not a supernaturalist ontology but a naturalistic one.

The key to understanding complexity in our universe is this: emergent properties. Karl Popper’s Three World Ontology gives us the best conceptual framework in which to understand our universe and the human mind.

Now this is where Searle goes philosophically wrong: his adherence to a crude monist materialism. Searle is unwilling to accept that (1) some emergent properties – like the human mind – are so remarkable that they constitute a new ontological category, and (2), because of (1), we have good reason to consider Popper’s three worlds ontology a realistic metaphysics.

So what is Karl Popper’s pluralist ontology?

Karl Popper’s Three Worlds system is a pluralist ontology that classifies all objects in our universe, though it is quite different from dualism. Ultimately, all objects in Popper’s system are causally dependent on matter and energy and their relations and permutations, so that – despite being ontologically pluralist – it has a fundamentally naturalist and physicalist/materialist basis.

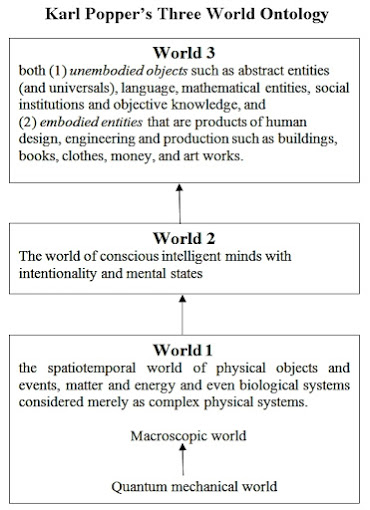

We can set out the Three World Ontology in the following diagram.

World 1 gives rise to World 2 objects, and World 2 in turn gives rise to World 3 objects; World 2 and World 3 are thus emergent properties of the lower-level world beneath each of them.

These three worlds can be described as follows:

World 1: the fundamental world of spatiotemporal physical objects and events, matter and energy, and even biological systems considered merely as complex physical systems. It is further divided into a fundamental distinction between:

(1) the world of quantum mechanics, and

(2) the macroscopic world of Newtonian physics and Einstein’s special and general theories of relativity.

In some manner that is not properly understood, our macroscopic world is an emergent property from the world of quantum mechanics.

World 2: the world of conscious intelligent human minds with intentionality and mental states (and, for example, any alien minds as complex as ours that have evolved on other planets, if they exist). True conscious intelligence is a property of minds in this world.

World 3: both

(1) unembodied objects such as abstract entities (and, most probably, universals), mathematical entities, social institutions and objective knowledge, and

(2) embodied entities that are products of human design, engineering and production such as buildings, books, clothes, money, and art works considered as human objects.

We must remember that “embodied entities” are members of both World 3 and World 1, but in different senses: e.g., considered purely physically as spatiotemporal objects describable in scientific terms, they belong to World 1; but when considered as human cultural artefacts, they belong to World 3.

The conscious intelligent human mind is an emergent property from World 1 brain states, but is so powerfully unique that it requires a new ontological category.

A true artificial intelligence that would have the same type of conscious intelligent mind as the human mind will have to directly reproduce or replicate the biological processes in the brain that cause consciousness. An “artificial” intelligence – in the sense of not being a normal human being – will probably need to have organic or biochemical structures in its “brain” in order for it to be fully and truly conscious.

Such entities, if they were fully conscious, would create all sorts of ethical issues. They would probably have to be imbued with moral/ethical principles as humans are, for example. Probably they would have to be granted some kind of human rights at some point, so that we could not treat them as slaves. And what would they do? What work would they perform?

The whole notion that your desktop computer could become as conscious as you are should be a horrifying thought. Wouldn’t it effectively be a slave?

At any rate, the whole issue of whether truly conscious artificial intelligence can be created is a matter for a future science that has first completely mastered what human (and higher animal) consciousness actually is.

To return to the issue of ontology, Popper summed up his system as follows:

“To sum up, we arrive at the following picture of the universe.

There is the physical universe, world 1, with its most important sub-universe, that of the living organisms.

World 2, the world of conscious experience, emerges as an evolutionary product from the world of organisms.

World 3, the world of the products of the human mind, emerges as an evolutionary product from world 2.

In each of these cases, the emerging product has a tremendous feedback effect upon the world from which it emerged. For example, the physico-chemical composition of our atmosphere which contains so much oxygen is a product of life – a feedback effect of the life of plants. And, especially, the emergence of world 3 has a tremendous feedback effect upon world 2 and, through its intervention, upon world 1.

The feedback effect between world 3 and world 2 is of particular importance. Our minds are the creators of world 3; but world 3 in its turn not only informs our minds, but largely creates them. The very idea of a self depends on world 3 theories, especially upon a theory of time which underlies the identity of the self, the self of yesterday, of today, and of tomorrow. The learning of a language, which is a world 3 object, is itself partly a creative act and partly a feedback effect; and the full consciousness of self is anchored in our human language.

Our relationship to our work is a feedback relationship: our work grows through us, and we grow through our work.

This growth, this self-transcendence, has a rational side and a non-rational side. The creation of new ideas, of new theories, is partly non-rational. It is a matter of what is called ‘intuition’ or ‘imagination’. But intuition is fallible, as is everything human. Intuition must be controlled through rational criticism, which is the most important product of human language. This control through criticism is the rational aspect of the growth of knowledge and of our personal growth. It is one of the three most important things that make us human. The other two are compassion, and the consciousness of our fallibility.” (Popper 1978: 166–167).

At any rate, Popper’s three world ontology has the following virtues:

(1) it avoids the fallacy of strong reductionism;

(2) it is compatible with a moderate realist or conceptualist view of abstract entities and universals;

(3) it understands that human minds are an important, real entity in the universe and is consistent with John Searle’s biological naturalist theory of the mind, even though minds are a type of emergent physical property (for why Searle is not a property dualist, see Searle 2002);

(4) it is compatible with the finding of modern science that emergent properties are a fundamental phenomenon in our universe;

(5) it is compatible with downwards causation;

(6) it is ultimately consistent with the view that higher-level worlds are causally dependent for their existence on lower-level worlds.

Jesper Jespersen (2009) uses Popper’s three worlds ontology in his Critical Realist methodology for Post Keynesian economics, and it is highly relevant for the ontological and epistemological basis of economics.

Further Reading

“More on Karl Popper’s Three World Ontology,” September 6, 2013.

“Karl Popper’s Three World Ontology,” September 4, 2013.

“Limits of Artificial Intelligence,” February 24, 2014.

BIBLIOGRAPHY

Jespersen, Jesper. 2009. Macroeconomic Methodology: A Post-Keynesian Perspective. Edward Elgar, Cheltenham, Glos. and Northampton, MA.

Popper, Karl R. 1978. “Three Worlds,” The Tanner Lecture on Human Values, delivered at the University of Michigan, April 7, 1978. www.thee-online.com/Documents/Popper-3Worlds.pdf

Searle, John R. 1980. “Minds, Brains, and Programs,” Behavioral and Brain Sciences 3: 417–424.

Searle, John R. 1980. “Intrinsic Intentionality,” Behavioral and Brain Sciences 3: 450–456.

Searle, John R. 1982. “The Chinese Room Revisited,” Behavioral and Brain Sciences 5: 345–348.

Searle, John R. 1990. “Is the Brain a Digital Computer?,” Proceedings and Addresses of the American Philosophical Association 64: 21–37.

Searle, John R. 1992. The Rediscovery of the Mind. MIT Press, Cambridge, Mass. and London.

Searle, John R. 2002. “Why I Am Not a Property Dualist,” Journal of Consciousness Studies 9.12: 57–64.