Lars Pålsson Syll considers the following as important: Statistics & Econometrics

How scientists manipulate research

All science entails human judgment, and using statistical models doesn’t relieve us of that necessity. When we work with misspecified models, the scientific value of significance testing is actually zero, even though you’re making valid statistical inferences! Statistical models and concomitant significance tests are no substitutes for doing real science.

In its standard form, a significance test is not the kind of ‘severe test’ that we are looking for when we seek to confirm or disconfirm empirical scientific hypotheses. This is problematic for many reasons, one being that there is a strong tendency to accept the null hypothesis simply because it can’t be rejected at the standard 5% significance level. In their standard form, significance tests are biased against new hypotheses because they make it hard to disconfirm the null hypothesis.

And as has been shown over and over again when significance tests are applied, people have a tendency to read “not disconfirmed” as “probably confirmed.” Standard scientific methodology tells us that when there is only, say, a 10% probability that pure sampling error could account for the observed difference between the data and the null hypothesis, it would be more “reasonable” to conclude that we have a case of disconfirmation. Especially if we perform many independent tests of our hypothesis and they all give the same 10% result as our reported one, I guess most researchers would count the hypothesis as even more disconfirmed.
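One way to make that last point concrete is to combine the independent results formally. The sketch below uses Fisher's method, which is my own choice of illustration and not something the argument above depends on: for k independent tests, minus two times the sum of the log p-values follows a chi-square distribution with 2k degrees of freedom under the null. The function names are hypothetical.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square variable with an even number of df.

    For df = 2k the tail has the closed form exp(-x/2) * sum_{i<k} (x/2)^i / i!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


def fisher_combined_p(p_values):
    """Combine independent p-values with Fisher's method (illustrative choice)."""
    stat = -2.0 * sum(math.log(p) for p in p_values)
    return chi2_sf_even_df(stat, 2 * len(p_values))


if __name__ == "__main__":
    # How the combined evidence changes as more tests report p = 0.10.
    for k in (1, 2, 5, 10):
        print(k, fisher_combined_p([0.10] * k))
```

With five independent tests that each report p = 0.10, the combined p-value comes out at roughly 0.011, well below the conventional 5% threshold that none of the individual tests reached.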

Statistics is no substitute for thinking. We should never forget that the underlying parameters we use when performing significance tests are model constructions. Our p-values mean next to nothing if the model is wrong. Statistical significance tests do not validate models!
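To see what “the model is wrong” can mean in practice, here is a small simulation sketch. It assumes one specific kind of misspecification that I picked purely for illustration: the one-sample t-test treats the observations as independent, while the data are in fact positively autocorrelated (an AR(1) process with coefficient 0.8). The null hypothesis of a zero mean is true throughout, so every rejection is a false alarm. The names, the sample size of 20, the number of replications and the seed are all arbitrary assumptions.

```python
import math
import random
import statistics

N = 20                # sample size (assumed for illustration)
T_CRIT_DF19 = 2.093   # two-sided 5% critical value of Student's t, 19 df


def ar1_sample(n, rho, rng):
    """Draw n observations from a stationary, mean-zero AR(1) process."""
    x = rng.gauss(0.0, 1.0 / math.sqrt(1.0 - rho ** 2))  # stationary start
    out = []
    for _ in range(n):
        out.append(x)
        x = rho * x + rng.gauss(0.0, 1.0)
    return out


def rejection_rate(rho, reps=2000, seed=12345):
    """Share of replications in which the iid t-test rejects the true null."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        y = ar1_sample(N, rho, rng)
        se = statistics.stdev(y) / math.sqrt(N)  # standard error assuming iid
        if abs(statistics.mean(y) / se) > T_CRIT_DF19:
            rejections += 1
    return rejections / reps


if __name__ == "__main__":
    print("independent data:   ", rejection_rate(0.0))
    print("autocorrelated data:", rejection_rate(0.8))
```

With independent data the rejection rate should sit near the nominal 5%. With autocorrelated data the same test rejects the true null far more often, although every individual p-value was computed correctly given the (wrong) model.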

In many social sciences, p-values and null hypothesis significance testing (NHST) are often used to draw far-reaching scientific conclusions, despite the fact that they are as a rule poorly understood and that there exist alternatives that are easier to understand and more informative.

Not least, confidence intervals (CIs) and effect sizes are to be preferred to the Neyman-Pearson-Fisher mishmash approach that is so often practiced by applied researchers.

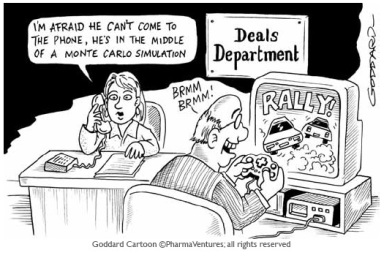

Suppose we run a Monte Carlo simulation with 100 replications of a fictitious sample of N = 20, drawn from a normally distributed population with a mean of 10 and a standard deviation of 20, and compute 95% confidence intervals and two-tailed p-values on a zero null hypothesis. We get varying CIs (since they are based on varying sample standard deviations), but with a minimum of 3.2 and a maximum of 26.1, we still get a clear picture of what would happen in an infinite limit sequence. The p-values, on the other hand (even though in a purely mathematical-statistical sense they are more or less equivalent to CIs), vary strongly from sample to sample, and jumping around between a minimum of 0.007 and a maximum of 0.999 doesn’t give you a clue of what will happen in an infinite limit sequence!
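The “more or less equivalent” remark can be spelled out. Writing $\bar{y}$ for the sample mean, $s$ for the sample standard deviation, $n$ for the sample size and $\mu_0$ for the value under the null hypothesis (my notation, introduced only for this illustration), the test statistic and the interval are built from the same ingredients:

```latex
t = \frac{\bar{y} - \mu_0}{s/\sqrt{n}},
\qquad
\mathrm{CI}_{95\%} = \bar{y} \pm t_{0.975,\,n-1}\,\frac{s}{\sqrt{n}}
```

The two-tailed p-value falls below 0.05 exactly when $|t| > t_{0.975,\,n-1}$, which is the same as saying that $\mu_0$ lies outside the 95% interval. The two summaries contain the same information, but the interval presents it on the scale of the data.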

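In case you want to do your own Monte Carlo simulations, here’s an example yours truly made using his favourite econometrics program, Gretl:

    # empty dataset with 20 observations
    nulldata 20
    # 100 replications; in progressive mode, store keeps one row per replication
    loop 100 --progressive
        # sample from a normal distribution with mean 10 and standard deviation 15
        series y = normal(10,15)
        scalar zs = (10-mean(y))/sd(y)
        scalar df = $nobs-1
        scalar ybar = mean(y)
        scalar ysd = sd(y)
        # standard error of the sample mean
        scalar ybarsd = ysd/sqrt($nobs)
        # t statistic for the null hypothesis mean = 10
        scalar tstat = (ybar-10)/ybarsd
        pvalue t df tstat
        # lower and upper bounds of the 95% confidence interval
        scalar lowb = mean(y) - critical(t,df,0.025)*ybarsd
        scalar uppb = mean(y) + critical(t,df,0.025)*ybarsd
        scalar pval = pvalue(t,df,tstat)
        store E:pvalcoeff.gdt lowb uppb pval
    endloop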
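For readers without Gretl, here is a rough Python sketch of the same kind of experiment, using only the standard library. It is not a translation of the listing above: the parameter values follow the verbal description (N = 20, mean 10, standard deviation 20, a zero null hypothesis, two-tailed p-values, 95% CIs), and the function names, the seed, and the use of Simpson's rule and bisection for the t distribution are my own assumptions.

```python
import math
import random
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value via Simpson's rule on the t density over [0, |t|]."""
    b = abs(t)
    if b == 0:
        return 1.0
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3  # P(0 < T < |t|)
    return max(0.0, 1.0 - 2.0 * central)


def t_critical(df, alpha=0.05):
    """Two-sided critical value, found by bisection on two_tailed_p."""
    lo, hi = 0.0, 50.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if two_tailed_p(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def simulate(reps=100, n=20, mu=10.0, sigma=20.0, mu0=0.0, seed=1):
    """Return one (lower bound, upper bound, p-value) tuple per replication."""
    rng = random.Random(seed)
    df = n - 1
    crit = t_critical(df)
    results = []
    for _ in range(reps):
        y = [rng.gauss(mu, sigma) for _ in range(n)]
        ybar = statistics.mean(y)
        se = statistics.stdev(y) / math.sqrt(n)  # standard error of the mean
        p = two_tailed_p((ybar - mu0) / se, df)
        results.append((ybar - crit * se, ybar + crit * se, p))
    return results


if __name__ == "__main__":
    res = simulate()
    widths = [upper - lower for lower, upper, _ in res]
    pvals = [p for _, _, p in res]
    print("CI width: min %.1f, max %.1f" % (min(widths), max(widths)))
    print("p-value:  min %.3f, max %.3f" % (min(pvals), max(pvals)))
```

Changing `seed` gives a different set of 100 replications. The exact minima and maxima will differ from the figures quoted in the text, but the contrast between fairly stable intervals and wildly varying p-values should remain visible.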