Topics:

Lars Pålsson Syll considers the following as important: Theory of Science & Methodology

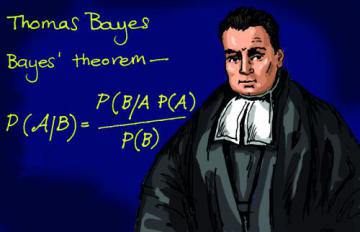

Bayes theorem — what’s the big deal?

The plausibility of your belief depends on the degree to which your belief – and only your belief – explains the evidence for it. The more alternative explanations there are for the evidence, the less plausible your belief is. That, to me, is the essence of Bayes’ theorem.

“Alternative explanations” can encompass many things. Your evidence might be erroneous, skewed by a malfunctioning instrument, faulty analysis, confirmation bias, even fraud. Your evidence might be sound but explicable by many beliefs, or hypotheses, other than yours.
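The point about alternative explanations can be illustrated with a small numerical sketch. The function name `posterior`, the prior of 0.5 and the likelihood values below are illustrative assumptions, not figures from the text; the sketch only shows how the posterior for a belief H shrinks as the alternatives to H get better at explaining the same evidence.

```python
def posterior(prior, p_e_given_h, p_e_given_alt):
    """Bayes' theorem for a hypothesis H against its alternatives (not-H).

    prior          -- P(H), the initial probability of the belief (assumed)
    p_e_given_h    -- P(e|H), how well H explains the evidence
    p_e_given_alt  -- P(e|not-H), how well the alternatives explain it
    """
    # Total probability of the evidence over H and its alternatives.
    p_e = p_e_given_h * prior + p_e_given_alt * (1.0 - prior)
    return p_e_given_h * prior / p_e


# Hypothetical numbers: H explains the evidence perfectly, and we vary
# how well the alternative explanations account for the same evidence.
prior = 0.5
for p_e_given_alt in (0.01, 0.25, 0.5, 1.0):
    print(p_e_given_alt, round(posterior(prior, 1.0, p_e_given_alt), 3))
```

When the alternatives explain the evidence just as well as H does (the last case), the posterior equals the prior and the evidence has taught us nothing.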

In other words, there’s nothing magical about Bayes’ theorem. It boils down to the truism that your belief is only as valid as its evidence. If you have good evidence, Bayes’ theorem can yield good results. If your evidence is flimsy, Bayes’ theorem won’t be of much use. Garbage in, garbage out.

The potential for Bayes abuse begins with your initial estimate of the probability of your belief, often called the “prior.” …

In many cases, estimating the prior is just guesswork, allowing subjective factors to creep into your calculations. You might be guessing the probability of something that – unlike cancer – does not even exist, such as strings, multiverses, inflation or God. You might then cite dubious evidence to support your dubious belief. In this way, Bayes’ theorem can promote pseudoscience and superstition as well as reason.

Embedded in Bayes’ theorem is a moral message: If you aren’t scrupulous in seeking alternative explanations for your evidence, the evidence will just confirm what you already believe. Scientists often fail to heed this dictum, which helps explain why so many scientific claims turn out to be erroneous. Bayesians claim that their methods can help scientists overcome confirmation bias and produce more reliable results, but I have my doubts.

And as I mentioned above, some string and multiverse enthusiasts are embracing Bayesian analysis. Why? Because the enthusiasts are tired of hearing that string and multiverse theories are unfalsifiable and hence unscientific, and Bayes’ theorem allows them to present the theories in a more favorable light. In this case, Bayes’ theorem, far from counteracting confirmation bias, enables it.

One of yours truly’s favourite ‘problem situating lecture arguments’ against Bayesianism goes something like this: Assume you’re a Bayesian turkey and hold a nonzero probability belief in the hypothesis H that “people are nice vegetarians that do not eat turkeys and that every day I see the sun rise confirms my belief.” For every day you survive, you update your belief according to Bayes’ Rule

P(H|e) = [P(e|H)P(H)]/P(e),

where evidence e stands for “not being eaten” and P(e|H) = 1. Given that there do exist other hypotheses than H, P(e) is less than 1 and a fortiori P(H|e) is greater than P(H). Every day you survive increases your probability belief that you will not be eaten. This is totally rational according to the Bayesian definition of rationality. Unfortunately — as Bertrand Russell famously noticed — for every day that goes by, the traditional Christmas dinner also gets closer and closer …
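The turkey's updating can be sketched numerically. This is a minimal illustration under stated assumptions: the single rival hypothesis (“people fatten turkeys for Christmas”), its daily survival probability of 0.99, the prior of 0.5 and the 300-day horizon are all hypothetical choices, as are the names `bayes_update` and `turkey_beliefs`. Only P(e|H) = 1 and the update rule come from the argument above.

```python
def bayes_update(prior, p_e_given_h, p_e_given_alt):
    """One application of P(H|e) = P(e|H) P(H) / P(e)."""
    # P(e) by total probability over H and the rival hypothesis.
    p_e = p_e_given_h * prior + p_e_given_alt * (1.0 - prior)
    return p_e_given_h * prior / p_e


def turkey_beliefs(prior=0.5, p_survive_given_alt=0.99, days=300):
    """Return the turkey's belief in H after each day it is not eaten.

    p_survive_given_alt is an assumed P(e|not-H): under the rival
    hypothesis the turkey still survives most days, just not all.
    """
    beliefs = [prior]
    for _ in range(days):
        # e = "not being eaten today", with P(e|H) = 1 as in the text.
        beliefs.append(bayes_update(beliefs[-1], 1.0, p_survive_given_alt))
    return beliefs


beliefs = turkey_beliefs()
print(f"day 0: {beliefs[0]:.3f}, day 300: {beliefs[-1]:.3f}")
```

Because P(e) stays below 1 as long as the rival hypothesis has any weight, every surviving day pushes the belief in H upward, right up to the day the evidence stops arriving.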

Neoclassical economics nowadays usually assumes that agents who have to make choices under conditions of uncertainty behave according to Bayesian rules (preferably the ones axiomatized by Ramsey (1931), de Finetti (1937) or Savage (1954)) – that is, they maximize expected utility with respect to some subjective probability measure that is continually updated according to Bayes’ theorem. If not, they are supposed to be irrational, and ultimately – via some “Dutch book” or “money pump” argument – susceptible to being ruined by some clever “bookie”.

Bayesianism reduces questions of rationality to questions of internal consistency (coherence) of beliefs, but – even granted this questionable reductionism – do rational agents really have to be Bayesian? As I have been arguing repeatedly over the years, there is no strong warrant for believing so.

In many of the situations that are relevant to economics one could argue that there is simply not enough adequate and relevant information to ground beliefs of a probabilistic kind, and that in those situations it is not really possible, in any relevant way, to represent an individual’s beliefs in a single probability measure.

Say you have come to learn (based on your own experience and tons of data) that the probability of your becoming unemployed in the US is 10%. Having moved to another country (where you have no experience of your own and no data), you have no information on unemployment and a fortiori nothing on which to base any probability estimate. A Bayesian would, however, argue that you would have to assign probabilities to the mutually exclusive alternative outcomes and that these have to add up to 1, if you are rational. That is, in this case – and based on symmetry – a rational individual would have to assign probability 10% to becoming unemployed and 90% to becoming employed.

That feels intuitively wrong though, and I guess most people would agree. Bayesianism cannot distinguish between symmetry-based probabilities from information and symmetry-based probabilities from an absence of information. In these kinds of situations most of us would rather say that it is simply irrational to be a Bayesian and better instead to admit that we “simply do not know” or that we feel ambiguous and undecided. Arbitrary and ungrounded probability claims are more irrational than being undecided in the face of genuine uncertainty, so if there is not sufficient information to ground a probability distribution it is better to acknowledge that simpliciter, rather than pretending to possess a certitude that we simply do not possess.

I think this critique of Bayesianism is in accordance with the views of Keynes’ A Treatise on Probability (1921) and General Theory (1937). According to Keynes we live in a world permeated by unmeasurable uncertainty – not quantifiable stochastic risk – which often forces us to make decisions based on anything but rational expectations. Sometimes we “simply do not know.” Keynes would not have accepted the view of Bayesian economists, according to whom expectations “tend to be distributed, for the same information set, about the prediction of the theory.” Keynes, rather, thinks that we base our expectations on the confidence or “weight” we put on different events and alternatives. To Keynes expectations are a question of weighing probabilities by “degrees of belief”, beliefs that have precious little to do with the kind of stochastic probabilistic calculations made by the rational agents modeled by Bayesian economists.

The bias toward the superficial and the response to extraneous influences on research are both examples of real harm done in contemporary social science by a roughly Bayesian paradigm of statistical inference as the epitome of empirical argument. For instance the dominant attitude toward the sources of black-white differential in United States unemployment rates (routinely the rates are in a two to one ratio) is “phenomenological.” The employment differences are traced to correlates in education, locale, occupational structure, and family background. The attitude toward further, underlying causes of those correlations is agnostic … Yet on reflection, common sense dictates that racist attitudes and institutional racism must play an important causal role. People do have beliefs that blacks are inferior in intelligence and morality, and they are surely influenced by these beliefs in hiring decisions … Thus, an overemphasis on Bayesian success in statistical inference discourages the elaboration of a type of account of racial disadvantages that almost certainly provides a large part of their explanation.