
Topics:

Lars Pålsson Syll considers the following as important: Economics, Theory of Science & Methodology


The logical fallacy that good science builds on

In economics, most models and theories build on a kind of argumentation pattern that looks like this (REH being the rational expectations hypothesis):

Premise 1: All Chicago economists believe in REH

Premise 2: Robert Lucas is a Chicago economist

—————————————————————–

Conclusion: Robert Lucas believes in REH

Among philosophers of science this is treated as an example of a logically valid deductive inference (and, following Quine, whenever logic is used in this post, ‘logic’ refers to deductive/analytical logic).

In hypothetico-deductive reasoning, we would use the conclusion to test the law-like hypothesis in premise 1 (according to the hypothetico-deductive model, a hypothesis is confirmed by evidence if the evidence is deducible from the hypothesis). If Robert Lucas does not believe in REH, we have gained some warranted reason for non-acceptance of the hypothesis (an obvious shortcoming here being that further information beyond that given in the explicit premises might have yielded another conclusion).

The hypothetico-deductive method (if we treat the hypothesis as absolutely certain/true, we instead speak of an axiomatic-deductive method) basically means that we

• Posit a hypothesis

• Infer empirically testable propositions (consequences) from it

• Test the propositions through observation or experiment

• Depending on the testing results, find the hypothesis either corroborated or falsified.
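The four steps can be sketched as a small Python function. This is only a minimal sketch under explicit assumptions: the `Economist` records and names such as `economist_a` are hypothetical toy data invented for illustration, not observations, and "testing" is reduced to comparing a predicted belief with a recorded one.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Economist:
    name: str
    chicago: bool
    believes_reh: bool

# Step 1: posit the hypothesis "All Chicago economists believe in REH".
def predicted_reh_belief(e: Economist) -> Optional[bool]:
    """Step 2: the testable consequence the hypothesis implies for one
    individual (None where the hypothesis says nothing)."""
    return True if e.chicago else None

def evaluate_hypothesis(observations: List[Economist]) -> str:
    """Steps 3 and 4: confront each prediction with what was observed."""
    for e in observations:
        predicted = predicted_reh_belief(e)
        if predicted is not None and predicted != e.believes_reh:
            # A single contrary instance falsifies a universal hypothesis.
            return f"falsified by {e.name}"
    # No contrary instance found: corroborated, not proven.
    return "corroborated"

# Hypothetical toy observations, invented for illustration only.
sample = [
    Economist("economist_a", chicago=True, believes_reh=True),
    Economist("economist_b", chicago=False, believes_reh=False),
]
print(evaluate_hypothesis(sample))  # corroborated
sample.append(Economist("economist_c", chicago=True, believes_reh=False))
print(evaluate_hypothesis(sample))  # falsified by economist_c
```

Note the asymmetry the sketch makes visible: passing the test only ever yields "corroborated", while one contrary case is enough for "falsified".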

However, in science we regularly use a kind of ‘practical’ argumentation where there is little room for applying the restricted logical ‘formal transformations’ view of validity and inference. Most people would probably accept the following argument as ‘valid’ reasoning, even though from a strictly logical point of view it is non-valid:

Premise 1: Robert Lucas is a Chicago economist

Premise 2: The recorded proportion of Keynesian Chicago economists is zero

————————————————————————–

Conclusion: So, certainly, Robert Lucas is not a Keynesian economist
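The contrast between the two Lucas arguments can be made concrete by searching small finite models for a countermodel, i.e. an interpretation where all premises are true and the conclusion is false. This is a minimal sketch under stated assumptions: predicates are modelled as subsets of a tiny domain, and reading "the recorded proportion of Keynesian Chicago economists is zero" as "a non-empty recorded subset of Chicago economists contains no Keynesians" is an interpretive assumption. A finite search can only turn up countermodels; failing to find one up to `max_size` is not, in general, a proof of validity.

```python
from itertools import chain, combinations, product

def subsets(domain):
    """All subsets of a finite domain, as frozensets."""
    items = list(domain)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1))]

def find_countermodel(premises, conclusion, n_predicates, max_size=3):
    """Search small models for one where every premise holds but the conclusion fails.

    A model is a domain {0, ..., size-1}, a tuple of subsets interpreting the
    predicates, and an element of the domain naming Robert Lucas.
    Returns (size, predicates, lucas) for the first countermodel found, else None.
    """
    for size in range(1, max_size + 1):
        domain = range(size)
        for preds in product(subsets(domain), repeat=n_predicates):
            for lucas in domain:
                if all(p(preds, lucas) for p in premises) and not conclusion(preds, lucas):
                    return size, preds, lucas
    return None

# Argument 1, predicates (chicago, reh).
arg1 = (
    [lambda pr, lucas: pr[0] <= pr[1],     # all Chicago economists believe in REH
     lambda pr, lucas: lucas in pr[0]],    # Robert Lucas is a Chicago economist
    lambda pr, lucas: lucas in pr[1],      # conclusion: he believes in REH
    2,
)

# Argument 2, predicates (chicago, keynesian, recorded).
arg2 = (
    [lambda pr, lucas: lucas in pr[0],     # Robert Lucas is a Chicago economist
     # assumed reading: a non-empty recorded set of Chicago economists, none Keynesian
     lambda pr, lucas: bool(pr[2]) and pr[2] <= pr[0] and not (pr[2] & pr[1])],
    lambda pr, lucas: lucas not in pr[1],  # conclusion: he is not a Keynesian
    3,
)

print(find_countermodel(*arg1))     # None: no countermodel in domains up to size 3
print(find_countermodel(*arg2)[0])  # 2: a countermodel appears once Lucas is off the record
```

The second argument fails formally exactly where the text says it should not matter in practice: nothing in the premises forces Lucas to be among the recorded economists.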

How come? Well, I guess one reason is that in science, contrary to what you find in most logic textbooks, not very many arguments are settled by showing that ‘All Xs are Ys.’ In scientific practice we instead present other-than-analytical explicit warrants and backings (data, experience, evidence, theories, models) for our inferences. As long as we can show that our ‘deductions’ or ‘inferences’ are justifiable and have well-backed warrants, our colleagues listen to us. That our scientific ‘deductions’ or ‘inferences’ are logical non-entailments is simply not a problem. To think otherwise is to commit the fallacy of misapplying formal-analytical logical categories to areas where they are pretty much irrelevant or simply beside the point.

Scientific arguments are not analytical arguments, where validity is solely a question of formal properties. Scientific arguments are substantial arguments. Whether Robert Lucas is a Keynesian or not is nothing we can decide on the basis of the formal properties of statements/propositions. We have to check what the guy has actually written and said to find out whether the hypothesis that he is a Keynesian is true or not.

Deductive logic may work well, given that it is used in deterministic closed models! In mathematics, the deductive-axiomatic method has worked just fine. But science is not mathematics. Conflating those two domains of knowledge has been one of the most fundamental mistakes made in modern economics. Applying the deductive-axiomatic method to real-world open systems immediately proves it to be excessively narrow and hopelessly irrelevant. Both the confirmatory and the explanatory ilk of hypothetico-deductive reasoning fail, since there is no way you can relevantly analyze confirmation or explanation as a purely logical relation between hypothesis and evidence or between law-like rules and explananda. In science we argue and try to substantiate our beliefs and hypotheses with reliable evidence. Propositional and predicate deductive logic, on the other hand, is not about reliability, but about the validity of the conclusions given that the premises are true.

Deduction, and the inferences that go with it, is an example of ‘explicative reasoning,’ where the conclusions we draw are already included in the premises. Deductive inferences are purely analytical, and it is this truth-preserving nature of deduction that makes it different from all other kinds of reasoning. But it is also its limitation, since truth in the deductive context does not refer to a real-world ontology (it only relates propositions as true or false within a formal-logic system), and as an argument scheme deduction is totally non-ampliative: the output of the analysis is nothing other than the input.

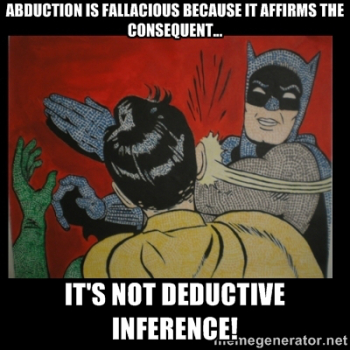

In science we standardly use a logically non-valid inference — the fallacy of affirming the consequent — of the following form:

(1) p => q

(2) q

————-

p

or, in instantiated form

(1) ∀x (Gx => Px)

(2) Pa

————

Ga
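Why the scheme above is logically invalid, while its close relative modus ponens (from p => q and p, infer q) is valid, can be checked mechanically with a truth table. A minimal sketch, assuming ‘=>’ is read as the material conditional: an argument form is valid if no assignment of truth values makes all premises true and the conclusion false. The same goes for the instantiated form: any individual a that is P but not G satisfies both premises while falsifying Ga.

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    # Material conditional: false only when a is true and b is false.
    return (not a) or b

def is_valid(premises, conclusion) -> bool:
    """Valid iff every assignment to (p, q) that makes all premises true
    also makes the conclusion true."""
    return all(
        conclusion(p, q)
        for p, q in product([True, False], repeat=2)
        if all(prem(p, q) for prem in premises)
    )

# (1) p => q, (2) p, therefore q
modus_ponens = ([lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q)
# (1) p => q, (2) q, therefore p  (affirming the consequent)
affirming_consequent = ([lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p)

print(is_valid(*modus_ponens))          # True
print(is_valid(*affirming_consequent))  # False: p=False, q=True is a counterexample
```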

Although logically invalid, it is nonetheless a kind of inference — abduction — that may be strongly warranted and truth-producing.

Following the general pattern ‘Evidence => Explanation => Inference’ we infer something based on what would be the best explanation given the law-like rule (premise 1) and an observation (premise 2). The truth of the conclusion (explanation) is nothing that is logically given, but something we have to justify, argue for, and test in different ways in order to establish it, if at all, with some degree of certainty. And as always when we deal with explanations, what is considered best is relative to what we know of the world. In the real world all evidence has an irreducible holistic aspect. We never conclude that evidence follows from a hypothesis simpliciter, but always given some more or less explicitly stated contextual background assumptions. All non-deductive inferences and explanations are a fortiori context-dependent.


If we extend the abductive scheme to incorporate the demand that the explanation has to be the best among a set of plausible competing/rival/contrasting potential and satisfactory explanations, we have what is nowadays usually referred to as inference to the best explanation.

In inference to the best explanation we start with a body of (purported) data/facts/evidence and search for explanations that can account for these data/facts/evidence. Having the best explanation means that you, given the context-dependent background assumptions, have a satisfactory explanation that can explain the fact/evidence better than any other competing explanation — and so it is reasonable to consider/believe the hypothesis to be true. Even if we (inevitably) do not have deductive certainty, our reasoning gives us a license to consider our belief in the hypothesis as reasonable.

Accepting a hypothesis means that you believe it explains the available evidence better than any other competing hypothesis. Knowing that we (after having earnestly considered and analysed the other available potential explanations) have been able to eliminate the competing potential explanations warrants and enhances our confidence that our preferred explanation is the best explanation, i.e., the explanation that provides us (given it is true) with the greatest understanding.
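The selection step in inference to the best explanation can be sketched as below. This rests on a strong simplifying assumption that the text itself would resist: that how well each rival accounts for the evidence, given the background assumptions, can be summarized in a single number. The hypothesis names, the scores and the `satisfactory` threshold are all hypothetical.

```python
from typing import Dict, Optional

def best_explanation(scores: Dict[str, float], satisfactory: float) -> Optional[str]:
    """Return the candidate that is satisfactory and strictly better than every
    rival, or None if there is no such candidate.

    `scores` maps each competing explanation to a hypothetical number standing
    in for how well it accounts for the evidence; `satisfactory` is an assumed
    minimum any acceptable explanation must reach.
    """
    if not scores:
        return None
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    best, best_score = ranked[0]
    if best_score < satisfactory:
        return None  # even the leading candidate is not a satisfactory explanation
    if len(ranked) > 1 and ranked[1][1] >= best_score:
        return None  # a rival explains the evidence equally well: no unique best
    return best

print(best_explanation({"H1": 0.8, "H2": 0.5, "H3": 0.3}, satisfactory=0.6))  # H1
print(best_explanation({"H1": 0.8, "H2": 0.8}, satisfactory=0.6))             # None
print(best_explanation({"H1": 0.4}, satisfactory=0.6))                        # None
```

Returning `None` rather than the highest-scoring candidate reflects the two demands in the text: the explanation must be satisfactory in its own right, and it must beat its rivals, not merely tie with them.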

This, of course, does not in any way mean that we cannot be wrong. Of course we can. Inferences to the best explanation are fallible inferences, since the premises do not logically entail the conclusion, so from a logical point of view inference to the best explanation is a weak mode of inference. But if the arguments put forward are strong enough, they can be warranted and give us justified true belief, and hence knowledge, even though they are fallible inferences. As scientists we sometimes, much like Sherlock Holmes and other detectives who reason by inference to the best explanation, experience disillusionment. We thought that we had reached a strong conclusion by ruling out the alternatives in the set of contrasting explanations. But what we thought was true turned out to be false.

That does not necessarily mean that we had no good reasons for believing what we believed. If we cannot live with that contingency and uncertainty, well, then we are in the wrong business. If it is deductive certainty you are after, rather than the ampliative and defeasible reasoning in inference to the best explanation, well, then go into math or logic, not science.

For realists, the name of the scientific game is explaining phenomena … Realists typically invoke ‘inference to the best explanation’ or IBE … What exactly is the inference in IBE, what are the premises, and what the conclusion? …

It is reasonable to believe that the best available explanation of any fact is true.

F is a fact.

Hypothesis H explains F.

No available competing hypothesis explains F as well as H does.

Therefore, it is reasonable to believe that H is true.

This scheme is valid and instances of it might well be sound. Inferences of this kind are employed in the common affairs of life, in detective stories, and in the sciences …

People object that the best available explanation might be false. Quite so – and so what? It goes without saying that any explanation might be false, in the sense that it is not necessarily true. It is absurd to suppose that the only things we can reasonably believe are necessary truths …

People object that being the best available explanation of a fact does not prove something to be true or even probable. Quite so – and again, so what? The explanationist principle – “It is reasonable to believe that the best available explanation of any fact is true” – means that it is reasonable to believe or think true things that have not been shown to be true or probable, more likely true than not.