Lars Pålsson Syll considers the following as important: Statistics & Econometrics

Econometrics — the path from cause to effect

In their book — Mastering ‘Metrics: The Path from Cause to Effect — Joshua D. Angrist and Jörn-Steffen Pischke write:

Our first line of attack on the causality problem is a randomized experiment, often called a randomized trial. In a randomized trial, researchers change the causal variables of interest … for a group selected using something like a coin toss. By changing circumstances randomly, we make it highly likely that the variable of interest is unrelated to the many other factors determining the outcomes we want to study. Random assignment isn’t the same as holding everything else fixed, but it has the same effect. Random manipulation makes other things equal hold on average across the groups that did and did not experience manipulation. As we explain … ‘on average’ is usually good enough.

Angrist and Pischke may “dream of the trials we’d like to do” and consider “the notion of an ideal experiment” something that “disciplines our approach to econometric research,” but to maintain that ‘on average’ is “usually good enough” is an allegation that in my view is rather unwarranted, and for many reasons.

First of all, it amounts to nothing but hand waving to simply assume, without argument, that it is tenable to treat social agents and relations as homogeneous and interchangeable entities.

Randomization is basically used to allow the econometrician to treat the population as consisting of interchangeable and homogeneous groups (‘treatment’ and ‘control’). The regression models one arrives at by using randomized trials tell us the average effect that variations in variable X have on the outcome variable Y, without having to explicitly control for the effects of other explanatory variables R, S, T, etc. Everything is assumed to be essentially equal except the values taken by variable X.

In a usual regression context one would apply an ordinary least squares estimator (OLS) in trying to get an unbiased and consistent estimate:

Y = α + βX + ε,

where α is a constant intercept, β a constant “structural” causal effect and ε an error term.

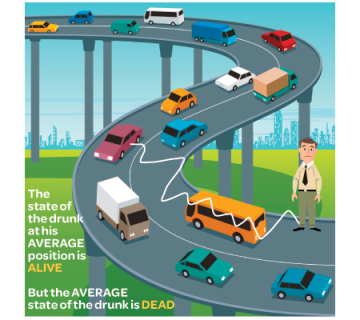

The problem here is that although we may get an estimate of the “true” average causal effect, this may “mask” important heterogeneous effects of a causal nature. Although we get the right answer that the average causal effect is 0, those who are “treated” (X=1) may have causal effects equal to -100 and those “not treated” (X=0) may have causal effects equal to 100. Contemplating being treated or not, most people would probably be interested in knowing about this underlying heterogeneity and would not consider the OLS average effect particularly enlightening.
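A small simulation may make the masking concrete. The sketch below is only an illustration under assumed values: the sample size, the intercept, the noise level and the 50/50 split between individuals with effects of +100 and -100 are all hypothetical choices, not taken from any study. It regresses Y on a randomly assigned binary X and compares the OLS slope with the individual effects it averages over.

```python
import numpy as np

def simulate_masked_heterogeneity(n=10_000, seed=0):
    """Randomized trial in which individual effects are +100 or -100 (assumed)."""
    rng = np.random.default_rng(seed)
    # Hypothetical individual causal effects: roughly half +100, half -100.
    tau = rng.choice([-100.0, 100.0], size=n)
    # Coin-toss assignment to treatment (X=1) or control (X=0).
    x = rng.integers(0, 2, size=n)
    eps = rng.normal(0.0, 1.0, size=n)
    alpha = 5.0  # arbitrary intercept, chosen for illustration
    y = alpha + tau * x + eps
    # OLS of Y on a constant and X.
    design = np.column_stack([np.ones(n), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1], tau

beta_hat, tau = simulate_masked_heterogeneity()
print(f"OLS estimate of the average effect: {beta_hat:.2f}")
print(f"Smallest absolute individual effect: {np.abs(tau).min():.0f}")
```

The estimated slope lands close to zero even though, by construction, no individual in the simulated population has an effect anywhere near zero.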

Limiting model assumptions in economic science always have to be closely examined. If we are to show that the mechanisms or causes we isolate and handle in our models are stable, in the sense that they do not change when we “export” them to our “target systems”, we have to be able to show that they do not hold only under ceteris paribus conditions. If they do, they are a fortiori of only limited value to our understanding, explanations or predictions of real economic systems.

Real world social systems are not governed by stable causal mechanisms or capacities. The kinds of “laws” and relations that econometrics has established are laws and relations about entities in models that presuppose causal mechanisms being atomistic and additive. When causal mechanisms operate in real world social target systems, they do so only in ever-changing and unstable combinations where the whole is more than a mechanical sum of parts. If economic regularities obtain, they do so (as a rule) only because we engineered them for that purpose. Outside man-made “nomological machines” they are rare, or even non-existent. Unfortunately, that also makes most of the achievements of econometrics, like most contemporary endeavours of mainstream economic theoretical modeling, rather useless.

Remember that a model is not the truth. It is a lie to help you get your point across. And in the case of modeling economic risk, your model is a lie about others, who are probably lying themselves. And what’s worse than a simple lie? A complicated lie.

Sam L. Savage, The Flaw of Averages

When Joshua Angrist and Jörn-Steffen Pischke in an earlier article of theirs [“The Credibility Revolution in Empirical Economics: How Better Research Design Is Taking the Con out of Econometrics,” Journal of Economic Perspectives, 2010] say that

anyone who makes a living out of data analysis probably believes that heterogeneity is limited enough that the well-understood past can be informative about the future

I really think they underestimate the heterogeneity problem. It does not just turn up as an external validity problem when trying to “export” regression results to different times or different target populations. It is also often an internal problem to the millions of regression estimates that economists produce every year.

But when the randomization is purposeful, a whole new set of issues arises — experimental contamination — which is much more serious with human subjects in a social system than with chemicals mixed in beakers … Anyone who designs an experiment in economics would do well to anticipate the inevitable barrage of questions regarding the valid transference of things learned in the lab (one value of z) into the real world (a different value of z) …

Absent observation of the interactive compounding effects z, what is estimated is some kind of average treatment effect which is called by Imbens and Angrist (1994) a “Local Average Treatment Effect,” which is a little like the lawyer who explained that when he was a young man he lost many cases he should have won but as he grew older he won many that he should have lost, so that on the average justice was done. In other words, if you act as if the treatment effect is a random variable by substituting β_t for β_0 + β′z_t, the notation inappropriately relieves you of the heavy burden of considering what are the interactive confounders and finding some way to measure them …

If little thought has gone into identifying these possible confounders, it seems probable that little thought will be given to the limited applicability of the results in other settings.
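The point about interactive confounders in the passage above can be sketched numerically. The following is a hypothetical illustration, not a reconstruction of any actual experiment: the values β_0 = 2 and β′ = 3, the two settings z = 1 (“lab”) and z = -1 (“target”), and the function name `estimate_effect` are all assumptions made for the example. With a binary, randomly assigned X, the difference in group means equals the OLS slope, so that is what the function returns.

```python
import numpy as np

def estimate_effect(z, n=20_000, beta0=2.0, beta1=3.0, seed=0):
    """Estimated treatment effect in a setting where the true effect is beta0 + beta1 * z."""
    rng = np.random.default_rng(seed)
    x = rng.integers(0, 2, size=n)   # randomized treatment assignment
    eps = rng.normal(size=n)
    y = 1.0 + (beta0 + beta1 * z) * x + eps
    # Difference in means; identical to the OLS slope for a binary regressor.
    return y[x == 1].mean() - y[x == 0].mean()

effect_lab = estimate_effect(z=1.0)      # experiment run at one value of z
effect_target = estimate_effect(z=-1.0)  # the setting we want to export to
print(f"Estimated effect in the lab (z = 1):     {effect_lab:.2f}")
print(f"Estimated effect in the target (z = -1): {effect_target:.2f}")
```

Under these assumed values the well-identified lab estimate has the opposite sign of the effect in the target setting, and nothing in the lab data alone would reveal this, since z never varies there.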

Evidence-based theories and policies are highly valued nowadays. Randomization is supposed to control for bias from unknown confounders. The received opinion is that evidence based on randomized experiments therefore is the best.

Lately, more and more economists have also come to advocate randomization as the principal method for ensuring valid causal inferences.

I would however rather argue that randomization, just as econometrics, promises more than it can deliver, basically because it requires assumptions that in practice are not possible to maintain.

Especially when it comes to questions of causality, randomization is nowadays considered some kind of “gold standard”. Everything has to be evidence-based, and the evidence has to come from randomized experiments.

But just like econometrics, randomization is basically a deductive method. Given the assumptions (such as manipulability, transitivity, separability, additivity, linearity, etc.) these methods deliver deductive inferences. The problem, of course, is that we will never completely know when the assumptions are right. And although randomization may contribute to controlling for confounding, it does not guarantee it, since genuine randomness presupposes infinite experimentation and we know all real experimentation is finite. And even if randomization may help to establish average causal effects, it says nothing of individual effects unless homogeneity is added to the list of assumptions. Real target systems are seldom epistemically isomorphic to our axiomatic-deductive models/systems, and even if they were, we still have to argue for the external validity of the conclusions reached from within these epistemically convenient models/systems. Causal evidence generated by randomization procedures may be valid in “closed” models, but what we usually are interested in is causal evidence in the real target system we happen to live in.
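That randomization balances confounders only on average, and not in any given finite trial, can be illustrated with a rough simulation. Everything here is an assumption made for the sketch: a single standard-normal background factor z, trials of 20 and 2,000 subjects, 2,000 repetitions, and the helper name `imbalance_spread`. For each simulated trial it records how far apart the treatment and control groups end up on z.

```python
import numpy as np

def imbalance_spread(n, reps=2000, seed=0):
    """Std. dev. across trials of the treated-minus-control gap in a background factor z."""
    rng = np.random.default_rng(seed)
    diffs = np.empty(reps)
    for r in range(reps):
        z = rng.normal(size=n)                # unobserved background factor
        treated = rng.permutation(n) < n // 2 # random split into two equal halves
        diffs[r] = z[treated].mean() - z[~treated].mean()
    return diffs.std()

spread_small = imbalance_spread(n=20)
spread_large = imbalance_spread(n=2000)
print(f"Typical imbalance with n = 20:   {spread_small:.3f}")
print(f"Typical imbalance with n = 2000: {spread_large:.3f}")
```

The imbalance shrinks as the trial grows but never vanishes at any finite size, so in a single small trial the groups may differ noticeably on factors the experimenter never observed.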

When does a conclusion established in population X hold for target population Y? Only under very restrictive conditions!

Angrist’s and Pischke’s “ideally controlled experiments” tell us with certainty what causes what effects — but only given the right “closures”. Making appropriate extrapolations from (ideal, accidental, natural or quasi) experiments to different settings, populations or target systems, is not easy. “It works there” is no evidence for “it will work here”. Causes deduced in an experimental setting still have to show that they come with an export-warrant to the target population/system. The causal background assumptions made have to be justified, and without licenses to export, the value of “rigorous” and “precise” methods — and ‘on-average-knowledge’ — is despairingly small.