Topics:

Lars Pålsson Syll considers the following as important: Statistics & Econometrics

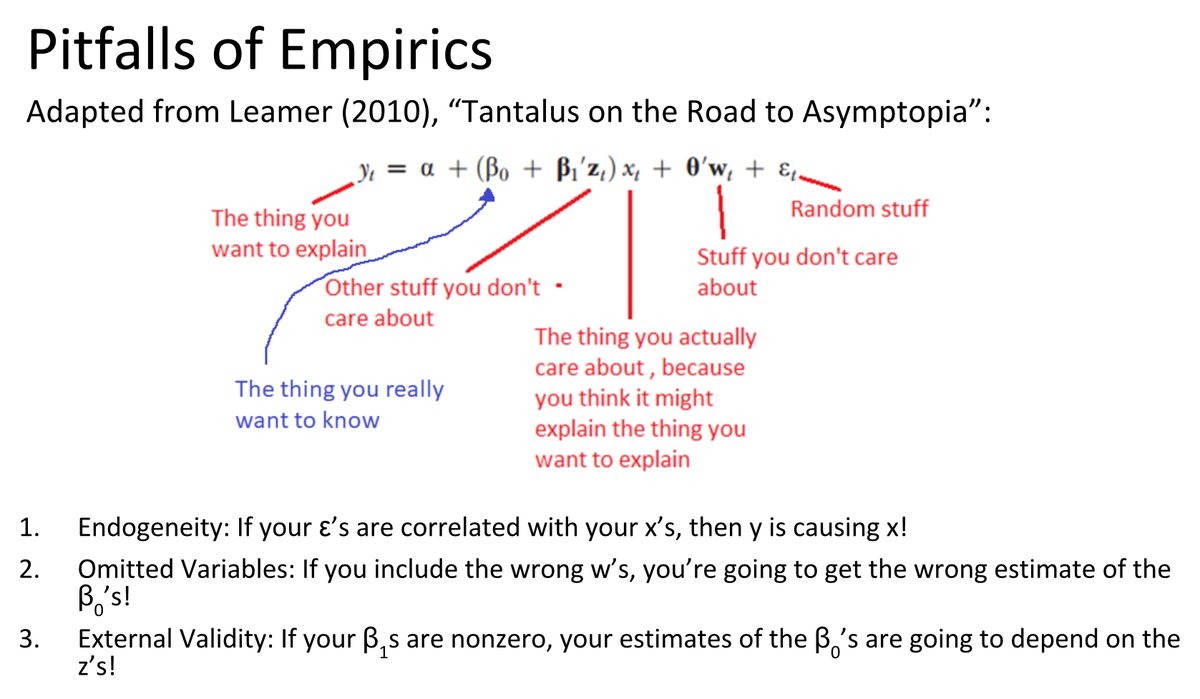

Ed Leamer’s Tantalus on the Road to Asymptopia is one of my favourite critiques of econometrics, and for the benefit of those who are not versed in the econometric jargon, this handy summary gives the gist of it in plain English:

Most work in econometrics is — still — made on the assumption that the researcher has a theoretical model that is ‘true.’ Based on this belief of having a correct specification for an econometric model or running a regression, one proceeds as if the only problem remaining to solve has to do with measurement and observation.

When things sound too good to be true, they usually aren’t true. And that goes for econometric wet dreams too. The snag is, as Leamer convincingly argues, that there is pretty little to support the perfect specification assumption. Looking around in social science and economics we don’t find a single regression or econometric model that lives up to the standards set by the ‘true’ theoretical model, and there is pretty little that gives us reason to believe things will be different in the future.

To think that we are able to construct a model where all relevant variables are included, and to correctly specify the functional relationships that exist between them, is not only a belief without support but a belief impossible to support.

The theories we work with when building our econometric regression models are insufficient. No matter what we study, there are always some variables missing, and we don’t know the correct way to functionally specify the relationships between the variables.
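To make the missing-variables point concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the data-generating process, the coefficients (a true effect of 1.0 for `x`, 2.0 for the omitted `z`), and the helper names `slope` and `residuals`. It only shows the textbook mechanism of omitted-variable bias, in a setting where we happen to know the ‘true’ model because we wrote it ourselves.

```python
import random

def slope(x, y):
    """OLS slope from regressing y on x (with an intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def residuals(x, y):
    """Residuals from regressing y on x (with an intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = slope(x, y)
    return [(yi - my) - b * (xi - mx) for xi, yi in zip(x, y)]

# Hypothetical data-generating process (an assumption, not real data):
# z drives both x and y, and the true effect of x on y is 1.0.
rng = random.Random(0)
n = 20000
z = [rng.gauss(0, 1) for _ in range(n)]
x = [0.8 * zi + rng.gauss(0, 1) for zi in z]
y = [1.0 * xi + 2.0 * zi + rng.gauss(0, 1) for xi, zi in zip(x, z)]

# 'Short' regression: z is left out, so x picks up part of z's effect.
b_short = slope(x, y)

# 'Long' regression: control for z, computed via the Frisch-Waugh-Lovell
# result (partial z out of both x and y, then regress residual on residual).
b_long = slope(residuals(z, x), residuals(z, y))

print(f"coefficient on x, z omitted:  {b_short:.3f}")
print(f"coefficient on x, z included: {b_long:.3f}")
```

Under these assumptions the short regression should land near 1 + 2 × 0.8 / 1.64 ≈ 1.98, almost twice the true effect, while the long regression should recover something close to 1.0. The catch in real work is that nobody hands us the list of omitted `z`s.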

Every regression model constructed is misspecified. There is always an endless list of possible variables to include, and endless possible ways to specify the relationships between them. So every applied econometrician comes up with his own specification and ‘parameter’ estimates. The econometric Holy Grail of consistent and stable parameter values is nothing but a dream.

In order to draw inferences from data as described by econometric texts, it is necessary to make whimsical assumptions. The professional audience consequently and properly withholds belief until an inference is shown to be adequately insensitive to the choice of assumptions. The haphazard way we individually and collectively study the fragility of inferences leaves most of us unconvinced that any inference is believable. If we are to make effective use of our scarce data resource, it is therefore important that we study fragility in a much more systematic way. If it turns out that almost all inferences from economic data are fragile, I suppose we shall have to revert to our old methods …
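What a more systematic study of fragility might look like can be sketched in a few lines. The example below is a crude illustration in the spirit of a sensitivity analysis, not Leamer's own procedure: it re-estimates one ‘focus’ coefficient under every subset of a small set of candidate controls and reports the range. The simulated data, the variable names (`z1`, `z2`, `z3`) and the helper `ols` are all assumptions made for the sake of the example.

```python
import itertools
import random

def ols(columns, y):
    """OLS coefficients of y on the given columns (include a column of ones)."""
    k = len(columns)
    # Build the normal equations X'X b = X'y as an augmented matrix.
    a = [[sum(ci * cj for ci, cj in zip(columns[i], columns[j])) for j in range(k)]
         + [sum(ci * yi for ci, yi in zip(columns[i], y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [u - f * v for u, v in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]

# Hypothetical data (an assumption): the true effect of x on y is 0.5,
# z1 and z2 matter and are correlated with x, z3 is irrelevant.
rng = random.Random(1)
n = 5000
z1 = [rng.gauss(0, 1) for _ in range(n)]
z2 = [rng.gauss(0, 1) for _ in range(n)]
z3 = [rng.gauss(0, 1) for _ in range(n)]
x = [0.7 * a + 0.7 * b + rng.gauss(0, 1) for a, b in zip(z1, z2)]
y = [0.5 * xi + 1.0 * a - 1.5 * b + rng.gauss(0, 1)
     for xi, a, b in zip(x, z1, z2)]

ones = [1.0] * n
controls = {"z1": z1, "z2": z2, "z3": z3}

# Estimate the coefficient on x under every possible set of controls.
estimates = {}
for r in range(len(controls) + 1):
    for subset in itertools.combinations(controls, r):
        cols = [ones, x] + [controls[name] for name in subset]
        estimates[subset] = ols(cols, y)[1]

lo, hi = min(estimates.values()), max(estimates.values())
for subset, b in estimates.items():
    print(f"controls={subset or ('none',)}: coefficient on x = {b:.3f}")
print(f"range across specifications: [{lo:.3f}, {hi:.3f}]")
```

With these made-up numbers the coefficient on `x` changes sign depending on which controls are included, although every one of the eight specifications looks perfectly respectable on its own. An inference that survives only under one of them is exactly what the quote calls fragile.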

A rigorous application of econometric methods in economics really presupposes that the phenomena of our real-world economies are ruled by stable causal relations between variables. Parameter values estimated in specific spatio-temporal contexts are presupposed to be exportable to totally different contexts. To warrant this assumption, however, one has to convincingly establish that the targeted acting causes are stable and invariant, so that they maintain their parametric status after the bridging. The endemic lack of predictive success of the econometric project indicates that this hope of finding fixed parameters is a hope for which there really is no other ground than hope itself.

The theoretical conditions that have to be fulfilled for regression analysis and econometrics to really work are nowhere even close to being met in reality. Making outlandish statistical assumptions does not provide a solid ground for doing relevant social science and economics. Although regression analysis and econometrics have become the most used quantitative methods in social sciences and economics today, it’s still a fact that the inferences made from them are invalid.

Given the usual set of assumptions (such as manipulability, transitivity, separability, additivity, linearity, etc) econometrics delivers deductive inferences. The problem, of course, is that we will never completely know when the assumptions are right. Conclusions can only be as certain as their premises — and that also applies to econometrics.

Economists have inherited from the physical sciences the myth that scientific inference is objective, and free of personal prejudice. This is utter nonsense. All knowledge is human belief: more accurately, human opinion …

The false idol of objectivity has done great damage to economic science. Theoretical econometricians have interpreted scientific objectivity to mean that an economist must identify exactly the variables in the model, the functional form, and the distribution of the errors. Given these assumptions, and given a data set, the econometric method produces an objective inference from a data set, unencumbered by the subjective opinions of the researcher. This advice could be treated as ludicrous, except that it fills all the econometric textbooks.

Although ‘ideally controlled experiments’ may tell us with certainty what causes what effects, this is so only when given the right ‘closures.’ Making appropriate extrapolations from (ideal, accidental, natural or quasi) experiments to different settings, populations or target systems, is not easy. “It works there” is no evidence for “it will work here.” The causal background assumptions made have to be justified, and without licenses to export, the value of ‘rigorous’ and ‘precise’ methods used when analyzing ‘natural experiments’ is often despairingly small.

To take just one example: since the core assumptions on which instrumental variables analysis builds are NEVER directly testable, those of us who choose to use instrumental variables to find out about causality ALWAYS have to defend and argue for the validity of the assumptions the causal inferences build on. Especially when dealing with natural experiments, we should be very cautious when being presented with causal conclusions without convincing arguments about the veracity of the assumptions made.

If you are out to make causal inferences you have to rely on a trustworthy theory of the data-generating process. The empirical results causal analysis supplies us with are only as good as the assumptions we make about the data-generating process. Garbage in, garbage out.
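The instrumental variables point can be illustrated with a small simulation. This is a sketch under invented assumptions: a single instrument `z`, an unobserved confounder `u`, a true effect of 1.0, and a parameter `gamma` (hypothetical, introduced here) that measures how strongly the instrument affects the outcome directly, in violation of the exclusion restriction. The estimator is the simple ratio cov(z, y) / cov(z, x).

```python
import random

def cov(a, b):
    """Sample covariance of two equally long lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / n

def iv_estimate(gamma, n=20000, seed=0):
    """Simple IV estimate of the effect of x on y (true effect: 1.0).

    gamma is the direct effect of the instrument z on y. The exclusion
    restriction holds only when gamma == 0. All numbers are assumptions.
    """
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]   # instrument
    u = [rng.gauss(0, 1) for _ in range(n)]   # unobserved confounder
    x = [zi + ui + rng.gauss(0, 1) for zi, ui in zip(z, u)]
    y = [1.0 * xi + ui + gamma * zi + rng.gauss(0, 1)
         for xi, ui, zi in zip(x, u, z)]
    return cov(z, y) / cov(z, x)

valid = iv_estimate(gamma=0.0)     # exclusion restriction holds
invalid = iv_estimate(gamma=0.5)   # instrument also affects y directly

print(f"IV estimate, valid instrument:   {valid:.3f}")
print(f"IV estimate, invalid instrument: {invalid:.3f}")
```

In this setup the first estimate should come out close to the true 1.0 and the second close to 1.5. The researcher only ever sees `z`, `x` and `y`, and with one instrument for one regressor nothing in those data distinguishes the two worlds. Whether `gamma` is zero has to be argued for, not estimated.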