Topics:

Lars Pålsson Syll considers the following as important: Statistics & Econometrics

As social scientists, and economists, we have to confront the all-important question of how to handle uncertainty and randomness. Should we define randomness with probability? If we do, we have to accept that to speak of randomness we also have to presuppose the existence of nomological probability machines, since probabilities cannot be spoken of (and, strictly speaking, do not exist at all) without specifying such system-contexts. Accepting Haavelmo’s domain of probability theory and sample space of infinite populations, just like Fisher’s ‘hypothetical infinite population, of which the actual data are regarded as constituting a random sample,’ von Mises’s ‘collective’ or Gibbs’s ‘ensemble’, also implies that judgments are made based on observations that are actually never made!

Infinitely repeated trials or samplings never take place in the real world. So that cannot be a sound inductive basis for a science with aspirations of explaining real-world socio-economic processes, structures or events. It’s not tenable.

As David Salsburg once noted — in his lovely The Lady Tasting Tea — on probability theory:

[W]e assume there is an abstract space of elementary things called ‘events’ … If a measure on the abstract space of events fulfills certain axioms, then it is a probability. To use probability in real life, we have to identify this space of events and do so with sufficient specificity to allow us to actually calculate probability measurements on that space … Unless we can identify [this] abstract space, the probability statements that emerge from statistical analyses will have many different and sometimes contrary meanings.

Like John Maynard Keynes and Nicholas Georgescu-Roegen, for example, Salsburg is very critical of the way social scientists, including economists and econometricians, have come to simply assume, uncritically and without argument, that they can apply probability distributions from statistical theory in their own area of research:

Probability is a measure of sets in an abstract space of events. All the mathematical properties of probability can be derived from this definition. When we wish to apply probability to real life, we need to identify that abstract space of events for the particular problem at hand … It is not well established when statistical methods are used for observational studies … If we cannot identify the space of events that generate the probabilities being calculated, then one model is no more valid than another … As statistical models are used more and more for observational studies to assist in social decisions by government and advocacy groups, this fundamental failure to be able to derive probabilities without ambiguity will cast doubt on the usefulness of these methods.

Importantly, this also means that if you cannot show that the data satisfy all the conditions of the probabilistic nomological machine (including, e.g., that the distribution of the deviations corresponds to a normal curve), then the statistical inferences drawn lack sound foundations.
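
To make the normal-curve condition concrete, here is a minimal sketch of one common way to confront it with data: the Jarque-Bera statistic, which compares the skewness and kurtosis of a set of deviations with the values a normal curve implies. The two data sets are simulated purely for illustration, and the function and variable names are assumptions of this sketch rather than anything taken from the authors quoted here.

```python
import math
import random


def jarque_bera(xs):
    """Return the Jarque-Bera statistic and its asymptotic p-value."""
    n = len(xs)
    mean = sum(xs) / n
    # Central moments, dividing by n (population form).
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    jb = n / 6.0 * (skewness ** 2 + excess_kurtosis ** 2 / 4.0)
    # Under normality, JB is asymptotically chi-squared with 2 degrees
    # of freedom, whose survival function is exp(-x / 2).
    p_value = math.exp(-jb / 2.0)
    return jb, p_value


# Simulated deviations (illustrative only, not real data).
rng = random.Random(0)
normal_dev = [rng.gauss(0.0, 1.0) for _ in range(2000)]
skewed_dev = [rng.expovariate(1.0) - 1.0 for _ in range(2000)]

jb_normal, p_normal = jarque_bera(normal_dev)
jb_skewed, p_skewed = jarque_bera(skewed_dev)

print(f"normal deviations: JB = {jb_normal:.2f}, p = {p_normal:.3f}")
print(f"skewed deviations: JB = {jb_skewed:.2f}, p = {p_skewed:.3g}")
```

A large statistic is evidence against the normal-curve condition. A small one only means that this particular check did not detect a failure, and it says nothing about the other conditions the machine requires.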

In his great book Statistical Models and Causal Inference: A Dialogue with the Social Sciences, David Freedman also touched on these fundamental problems, which arise when you try to apply statistical models outside overly simple nomological machines like coin tossing and roulette wheels:

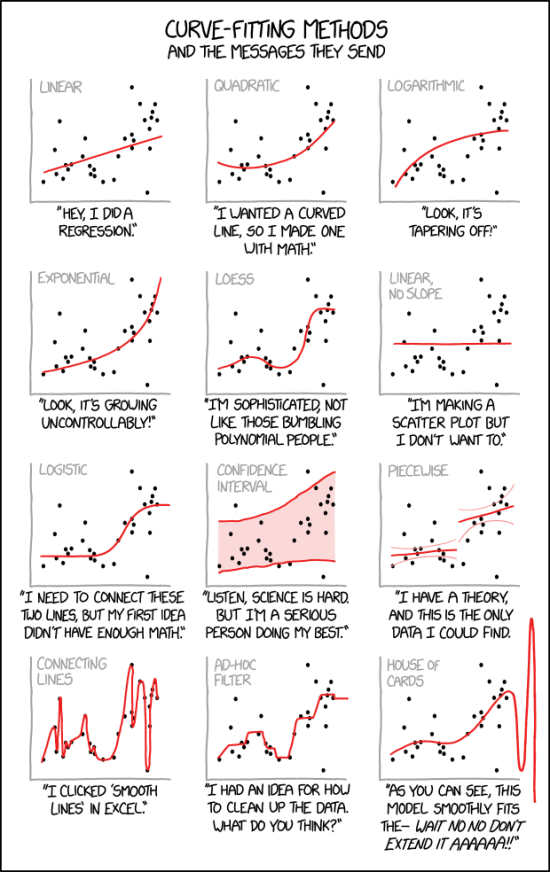

Lurking behind the typical regression model will be found a host of assumptions; without them, legitimate inferences cannot be drawn from the model. There are statistical procedures for testing some of these assumptions. However, the tests often lack the power to detect substantial failures. Furthermore, model testing may become circular; breakdowns in assumptions are detected, and the model is redefined to accommodate. In short, hiding the problems can become a major goal of model building …

Regression models are widely used by social scientists to make causal inferences; such models are now almost a routine way of demonstrating counterfactuals. However, the “demonstrations” generally turn out to depend on a series of untested, even unarticulated, technical assumptions. Under the circumstances, reliance on model outputs may be quite unjustified. Making the ideas of validation somewhat more precise is a serious problem in the philosophy of science. That models should correspond to reality is, after all, a useful but not totally straightforward idea – with some history to it. Developing appropriate models is a serious problem in statistics; testing the connection to the phenomena is even more serious …

In our days, serious arguments have been made from data. Beautiful, delicate theorems have been proved, although the connection with data analysis often remains to be established. And an enormous amount of fiction has been produced, masquerading as rigorous science.

And as if this weren’t enough, one could also seriously wonder what kind of ‘populations’ these statistical and econometric models are ultimately based on. Why should we as social scientists (and not as pure mathematicians working with formal-axiomatic systems without the urge to confront our models with real target systems) unquestioningly accept Haavelmo’s ‘infinite population,’ Fisher’s ‘hypothetical infinite population,’ von Mises’s ‘collective’ or Gibbs’s ‘ensemble’?

Of course, one could treat our observational or experimental data as random samples from real populations. I have no problem with that. However, probabilistic econometrics does not content itself with that kind of population. Instead, it creates imaginary populations of ‘parallel universes’ and assumes that our data are random samples from that kind of population.

But this is actually nothing but hand-waving! And it is inadequate for real science. As David Freedman writes in Statistical Models and Causal Inference:

With this approach, the investigator does not explicitly define a population that could in principle be studied, with unlimited resources of time and money. The investigator merely assumes that such a population exists in some ill-defined sense. And there is a further assumption, that the data set being analyzed can be treated as if it were based on a random sample from the assumed population. These are convenient fictions … Nevertheless, reliance on imaginary populations is widespread. Indeed regression models are commonly used to analyze convenience samples … The rhetoric of imaginary populations is seductive because it seems to free the investigator from the necessity of understanding how data were generated.
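
Freedman's contrast between a random sample from a real population and a convenience sample can be illustrated with a toy simulation. Everything in this sketch is an assumption made for illustration: the 'population' is a simulated list of 10,000 incomes, and the convenience sample is modelled by letting units with higher incomes be proportionally easier to reach.

```python
import random
import statistics

rng = random.Random(42)

# A real, finite, fully enumerable population (simulated incomes).
population = [rng.lognormvariate(10.0, 0.8) for _ in range(10_000)]
true_mean = statistics.fmean(population)

# A genuine random sample: every unit is equally likely to be drawn.
random_sample = rng.sample(population, 500)

# A convenience sample (hypothetical mechanism): the chance of ending
# up in the data is proportional to income, drawn with replacement.
convenience_sample = rng.choices(population, weights=population, k=500)

# Relative error of each sample mean against the known population mean.
random_err = abs(statistics.fmean(random_sample) - true_mean) / true_mean
conv_err = abs(statistics.fmean(convenience_sample) - true_mean) / true_mean

print(f"population mean:         {true_mean:,.0f}")
print(f"random sample error:     {random_err:.1%}")
print(f"convenience sample error: {conv_err:.1%}")
```

Both samples have the same size, and the same formula for a standard error could be applied to each. Only the first is backed by a sampling mechanism that is actually known, which is the understanding of how the data were generated that the rhetoric of imaginary populations lets the investigator skip.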