Cholesky decomposition (student stuff)

The problem with unjustified assumptions

An ongoing concern is that excessive focus on formal modeling and statistics can lead to neglect of practical issues and to overconfidence in formal results … Analysis interpretation depends on contextual judgments about how reality is to be mapped onto the model, and how the formal analysis results are to be mapped back into reality. But overconfidence in formal outputs is only to be expected when much labor has...

Textbooks problem — teaching the wrong things all too well

It is well known that even experienced scientists routinely misinterpret p-values in all sorts of ways, including confusion of statistical and practical significance, treating non-rejection as acceptance of the null hypothesis, and interpreting the p-value as some sort of replication probability or as the posterior probability that the null hypothesis is true … It is shocking that these errors seem...

Monte Carlo simulation on p-values (wonkish)

In many social sciences, p-values and null hypothesis significance testing (NHST) are often used to draw far-reaching scientific conclusions – despite the fact that they are as a rule poorly understood and that there exist alternatives that are easier to understand and more informative. Not least, confidence intervals (CIs) and effect sizes are to be preferred to the Neyman-Pearson-Fisher mishmash approach...

Factor analysis — like telling time with a stopped clock

Exploratory factor analysis exploits correlations to summarize data, and confirmatory factor analysis — stuff like testing that the right partial correlations vanish — is a prudent way of checking whether a model with latent variables could possibly be right. What the modern g-mongers do, however, is try to use exploratory factor analysis to uncover hidden causal structures. I am very, very interested...

Cleaning p-values

The one place that preregistration is really needed … is if you want clean p-values. A p-value is very explicitly a statement about how you would’ve analyzed the data, had they come out differently. Sometimes when I’ve criticized published p-values on the grounds of forking paths, the original authors have fought back angrily, saying how unfair it is for me to first make an assumption about what they would’ve done under different conditions, and then make conclusions based on...

Some methodological perspectives on statistical inference in economics

Causal modeling attempts to maintain this deductive focus within imperfect research by deriving models for observed associations from more elaborate causal (‘structural’) models with randomized inputs … But in the world of risk assessment … the causal-inference process cannot rely solely on deductions from models or other purely algorithmic approaches. Instead, when randomization is...

Simpson’s paradox, Trump voters and the limits of econometrics

From a more theoretical perspective, Simpson’s paradox importantly shows that causality can never be reduced to a question of statistics or probabilities, unless you are — miraculously — able to keep constant all other factors that influence the probability of the outcome studied. To understand causality we always have to relate it to a specific causal structure. Statistical...

Keynes’ devastating critique of econometrics

Mainstream economists often hold the view that Keynes’ criticism of econometrics was the result of a sadly misinformed and misguided person who disliked and did not understand much of it. This is, however, nothing but a gross misapprehension. To be careful and cautious is not the same as to dislike. Keynes did not misunderstand the crucial issues at stake in the development of econometrics. Quite the contrary. He...

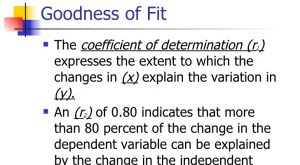

Goodness of fit

Which independent variables should be included in the equation? The goal is a “good fit” … How can a good fit be recognized? A popular measure for the satisfactoriness of a regression is the coefficient of determination, R². If this number is large, it is said, the regression gives a good fit … Nothing about R² supports these claims. This statistic is best regarded as characterizing the geometric shape of the regression points and not much more. The central difficulty with R²...
