James Heckman — ‘Nobel prize’ winner gone wrong

Here’s James Heckman in 2013: “Also holding back progress are those who claim that Perry and ABC are experiments with samples too small to accurately predict widespread impact and return on investment. This is a nonsensical argument. Their relatively small sample sizes actually speak for — not against — the strength of their findings. Dramatic differences between treatment and control-group outcomes are...

Understanding the limits of statistical inference

[embedded content]

This is indeed an instructive video on what statistical inference is all about. But we have to remember that economics and statistics are two quite different things, and as long as economists cannot identify their statistical theories with real-world phenomena, there is no real warrant for taking their statistical inferences seriously. Just as there is no such thing as a ‘free lunch,’...

Econometric alchemy

Thus we have “econometric modelling”, that activity of matching an incorrect version of [the parameter matrix] to an inadequate representation of [the data generating process], using insufficient and inaccurate data. The resulting compromise can be awkward, or it can be a useful approximation which encompasses previous results, throws light on economic theory and is sufficiently constant for prediction, forecasting and perhaps even policy. Simply writing down an “economic...

Heterogeneity and the flaw of averages

With interactive confounders explicitly included, the overall treatment effect $\beta_0 + \beta' z_t$ is not a number but a variable that depends on the confounding effects. Absent observation of the interactive compounding effects, what is estimated is some kind of average treatment effect which is called by Imbens and Angrist (1994) a “Local Average Treatment Effect,” which is a little like the lawyer who explained that when he...
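A minimal simulation of the "flaw of averages", under assumptions that are not in the excerpt: a single binary moderator z that the analyst never observes, a randomized binary treatment x that is independent of z, and hypothetical values $\beta_0 = 1$ and $\beta' = 2$ (written `BETA0` and `BETA1` below; the intercept 0.5 and the noise are also made up). Unit-level effects are then either 1 or 3, yet the difference in means comes out at roughly 2, an "average" effect that no unit actually experiences. This is a plain randomized-experiment sketch, not the instrumental-variables setting in which Imbens and Angrist define the LATE.

```python
import random
from statistics import mean

# Hypothetical parameters (assumptions, not from the source):
# effect for a unit with moderator z is BETA0 + BETA1 * z.
BETA0, BETA1 = 1.0, 2.0


def unit_effect(z):
    """Treatment effect for a unit whose (unobserved) moderator is z."""
    return BETA0 + BETA1 * z


def simulate(n=100_000, seed=42):
    rng = random.Random(seed)
    treated, control = [], []
    effects_seen = set()
    for _ in range(n):
        z = 1 if rng.random() < 0.5 else 0  # moderator, never observed by the analyst
        x = 1 if rng.random() < 0.5 else 0  # randomized treatment, independent of z
        eff = unit_effect(z)
        effects_seen.add(eff)
        y = 0.5 + eff * x + rng.gauss(0.0, 1.0)  # hypothetical outcome equation
        (treated if x else control).append(y)
    # The analyst only sees (x, y), so the natural estimate is a difference in means.
    ate_hat = mean(treated) - mean(control)
    return ate_hat, effects_seen


ate_hat, effects = simulate()
print(f"estimated average effect: {ate_hat:.2f}")
print(f"unit-level effects actually present: {sorted(effects)}")
```

The estimate lands close to $\beta_0 + \beta' E[z] = 2$, which is a perfectly good number for the population as a whole and a wrong number for every single unit in it.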

What is a statistical model?

My critique is that the currently accepted notion of a statistical model is not scientific; rather, it is a guess at what might constitute (scientific) reality without the vital element of feedback, that is, without checking the hypothesized, postulated, wished-for, natural-looking (but in fact only guessed) model against that reality. To be blunt, as far as is known today, there is no such thing as a concrete i.i.d....

Simpson’s paradox

[embedded content]

From a more theoretical perspective, Simpson’s paradox importantly shows that causality can never be reduced to a question of statistics or probabilities, unless you are — miraculously — able to keep constant all other factors that influence the probability of the outcome studied. To understand causality we always have to relate it to a specific causal structure. Statistical correlations are never enough. No structure, no causality. Simpson’s paradox is an...
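To make the reversal concrete, here is a small sketch with hypothetical counts (invented for illustration, not taken from the embedded video): treatment A beats treatment B within each of two severity strata, yet loses once the strata are pooled, because A was given mostly to severe cases (100 of 110) and B mostly to mild ones (100 of 110). Nothing in the counts themselves says whether the stratified or the pooled comparison is the causally relevant one; that depends on the assumed causal structure, for instance whether severity drives both the choice of treatment and the outcome.

```python
from fractions import Fraction

# Hypothetical (successes, trials) for two treatments in two strata.
data = {
    "mild":   {"A": (9, 10),   "B": (80, 100)},
    "severe": {"A": (30, 100), "B": (2, 10)},
}


def rate(successes, trials):
    """Exact success rate, so comparisons are free of rounding issues."""
    return Fraction(successes, trials)


def pooled(arm):
    """Success rate for one arm after ignoring the strata."""
    s = sum(data[k][arm][0] for k in data)
    n = sum(data[k][arm][1] for k in data)
    return Fraction(s, n)


# Within each stratum, A has the higher success rate ...
for stratum, arms in data.items():
    ra, rb = rate(*arms["A"]), rate(*arms["B"])
    print(f"{stratum}: A = {float(ra):.3f}, B = {float(rb):.3f}",
          "-> A better" if ra > rb else "-> B better")

# ... but pooled over strata, B comes out ahead.
pa, pb = pooled("A"), pooled("B")
print(f"pooled: A = {float(pa):.3f}, B = {float(pb):.3f}",
      "-> A better" if pa > pb else "-> B better")
```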

Logistic regression (student stuff)

[embedded content]

And in the video below (in Swedish) yours truly shows how to perform a logit regression using Gretl:

[embedded content]
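The video does the estimation in Gretl. As a rough illustration of what a logit routine computes, here is a swapped-in pure-Python sketch (not Gretl's implementation) that fits $P(y = 1 \mid x) = 1/(1 + e^{-(a + b x)})$ by maximum likelihood using Newton-Raphson steps. The function name `fit_logit` and the data are hypothetical.

```python
import math


def fit_logit(x, y, iters=50, tol=1e-10):
    """Fit P(y=1|x) = 1 / (1 + exp(-(a + b*x))) by maximum likelihood,
    using Newton-Raphson on the two parameters (a, b)."""
    a, b = 0.0, 0.0
    for _ in range(iters):
        # Score (gradient of the log-likelihood) and information matrix.
        ga = gb = 0.0
        haa = hab = hbb = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(a + b * xi)))
            r = yi - p          # residual on the probability scale
            w = p * (1.0 - p)   # weight = variance of a Bernoulli(p)
            ga += r
            gb += r * xi
            haa += w
            hab += w * xi
            hbb += w * xi * xi
        # Newton step: solve I * delta = score for the 2x2 information matrix I.
        det = haa * hbb - hab * hab
        da = (hbb * ga - hab * gb) / det
        db = (haa * gb - hab * ga) / det
        a, b = a + da, b + db
        if abs(da) + abs(db) < tol:
            break
    return a, b


# Hypothetical data; the outcomes overlap in x, so the MLE exists.
x = [0, 1, 2, 3, 4, 5, 6, 7]
y = [0, 0, 1, 0, 1, 0, 1, 1]
a, b = fit_logit(x, y)
print(f"intercept = {a:.3f}, slope = {b:.3f}")
```

At the maximum the score equations hold, so with an intercept in the model the fitted probabilities sum to the number of observed 1s; that is a quick sanity check on any logit output, whichever program produced it.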

Causality matters!

[embedded content]

Causality in the social sciences, economics included, can never be solely a question of statistical inference. Causality entails more than predictability, and really explaining social phenomena in depth requires theory. Analysis of variation, the foundation of all econometrics, can never in itself reveal how these variations are brought about. Only when we are able to tie actions, processes or structures to the statistical relations detected can we say that we...

Proving gender discrimination using randomization (student stuff)

[embedded content]
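The embedded video is not reproduced here. As a sketch of how a randomization test of this kind can work, assume a hypothetical promotion study (all counts invented for illustration): 40 otherwise identical personnel files, 20 labelled male and 20 female, of which 15 "male" and 9 "female" files are recommended for promotion. If gender played no role, the 24 promotions would be spread over the 40 files as if at random, so repeatedly shuffling the labels shows how large a gap chance alone tends to produce. The exact one-sided p-value from the hypergeometric distribution is computed alongside as a check on the simulation.

```python
import random
from fractions import Fraction
from math import comb

# Hypothetical data (not from the video).
N_MEN, N_WOMEN = 20, 20
PROMOTED_MEN, PROMOTED_WOMEN = 15, 9


def diff_in_rates(promoted_men, promoted_women):
    """Promotion rate for men minus promotion rate for women (exact arithmetic)."""
    return Fraction(promoted_men, N_MEN) - Fraction(promoted_women, N_WOMEN)


observed = diff_in_rates(PROMOTED_MEN, PROMOTED_WOMEN)


def randomization_p_value(reps=20_000, seed=1):
    """Shuffle the gender labels while keeping the promotion outcomes fixed,
    and count how often chance alone gives a gap at least as large as observed."""
    rng = random.Random(seed)
    total_promoted = PROMOTED_MEN + PROMOTED_WOMEN
    outcomes = [1] * total_promoted + [0] * (N_MEN + N_WOMEN - total_promoted)
    hits = 0
    for _ in range(reps):
        rng.shuffle(outcomes)
        men = sum(outcomes[:N_MEN])  # first N_MEN slots get the "male" label
        women = total_promoted - men
        if diff_in_rates(men, women) >= observed:
            hits += 1
    return hits / reps


def exact_p_value():
    """Same one-sided p-value, computed exactly: the number of promoted 'men'
    is hypergeometric under the null of no discrimination."""
    total_promoted = PROMOTED_MEN + PROMOTED_WOMEN
    n = N_MEN + N_WOMEN
    favourable = sum(
        comb(total_promoted, m) * comb(n - total_promoted, N_MEN - m)
        for m in range(PROMOTED_MEN, min(N_MEN, total_promoted) + 1)
    )
    return favourable / comb(n, N_MEN)


p_sim = randomization_p_value()
p_exact = exact_p_value()
print(f"observed gap: {float(observed):.2f}")
print(f"randomization p-value: {p_sim:.3f}, exact p-value: {p_exact:.3f}")
```

The shuffle version generalizes to test statistics without a neat closed form, which is why it is the one usually taught; the exact calculation only works here because the statistic depends on a single hypergeometric count.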

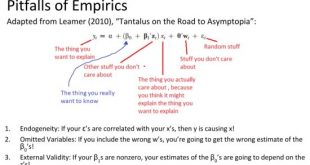

Ed Leamer and the pitfalls of econometrics

Ed Leamer’s Tantalus on the Road to Asymptopia is one of my favourite critiques of econometrics, and for the benefit of those who are not versed in the econometric jargon, this handy summary gives the gist of it in plain English: Most work in econometrics and regression analysis is — still — made on the assumption that the researcher has a theoretical model that is ‘true.’ Based on this belief of having a...

Heterodox